Posts Tagged open source

Oracle/Google: the strategy behind Sun, Oracle and the OSS implications

Posted by cdaffara in blog, divertissements on August 15th, 2010

In my previous post, I tried to provide some details on what in my opinion were the most relevant legal and licensing aspects of the recently launched Oracle lawsuit against Google. I would like now to provide some perspective on what may have been the motives behind this lawsuit, and what are the possible implications for the Java and Open Source communities.

First of all, it is clear that, as I mentioned before, Google turned the lawsuit into a positive event for their (slightly battered) public image. By turning the lawsuit against Android into an attack on the open source community, Google effectively created a positive image for itself, as the David unjustly accused by the Oracle giant. It is also clear that the lawsuit itself is actually quite weak, focusing on a copyright claim that is very vague (given the fact that Google never claimed to use Java), and a set of patent claims for techniques that are probably not relevant anymore (especially in the post-Bilski era). One of the possible reasons for this choice of claims is to be sure that even the widely different Dalvik machine would be at least partially covered; the other is the fact that all of Classpath was included in the OIN “System Components” covered technologies. Since both Oracle and Google are part of OIN, I suspect that Oracle wanted to avoid any potential complication coming from this “broken marriage”.

But – this is not the only relevant aspect. Actually, an angle that is probably more important is the impact of the lawsuit on Java, Java for mobile, Android and the OSS communities that were part of the Sun technology landscape.

Enterprise Java: no change at all. Java is a very strong brand among corporate developers, and I doubt that the lawsuit will change anything at all; in fact, all the various licensees of the full Java specification are on perfectly legal grounds, and face no problem at all. Despite the opportunistic claims by Miguel de Icaza (who suggests that Mono/C# would have been a better choice), there is nothing in the lawsuit that would imply that other Java or Java-related technologies may be impacted (actually, Mono and the CLR are in the same situation as Dalvik).

Mobile Java: as I mentioned before, the lawsuit put the last stone on the JavaME grave. The only potentially relevant route away from the land of the dead could have been JavaFX; that was too little, too late – incomplete, missing several pieces, missing a roadmap, and uselessly released as a binary-only project. Android used the Java language and extended it with mobile-specific classes that were more modern and more useful for recent smartphones and even non-phone applications (like entertainment devices, automotive and navigation devices). It is no surprise that, coupled with the Google brand name, Android surged in popularity so much as to become a threat.

Oracle OSS projects: Oracle has always been an opportunistic user of open source. With the term “opportunistic” I am not implying any negative connotation: it is simply the observation that Oracle dabbled in open source whenever there was an opportunity to reduce its own research and development costs. If you look at Oracle projects, it is clear that all of them are related to infrastructural functionality for the Oracle run-time and for developer tools (using Eclipse as a basis). I was not able to find any “intrinsic” open source project started or adopted by Oracle that was not focused on this approach. So, for those projects, I believe that there will be no difference; for example, I believe that the activity on the Oracle-sponsored BTRFS project will not change significantly. Oracle actually does not care at all if they are seen as “enemies”, or if their projects are not used anymore by others. What they care about is getting their patches included in Linux. Remember that Oracle is an “old style” company; it has two basic product lines: its database and its set of enterprise applications. Everything else is not quite necessary, and probably will be abandoned.

Sun OSS projects: as for Sun, there is a long preamble first. Sun has always been, first and foremost, an engineering company – something like Silicon Graphics in the past, or more recently Google. Sun had open sourced something of value whenever it was necessary to establish a common platform or protocol, like NFS or NIS+; but it was the advent of Jonathan Schwartz that actually turned things towards open source. The ponytailed CEO tried to turn the Sun behemoth towards a fully open strategy, but was unable to manage the conversion before being ousted. It is a pity, actually – Sun could have leveraged its size, large number of technical partners and amount of technologies to become a platform provider like Red Hat – but 10 times larger. The problem with this strategy is that it implies a large amount of cooperative development, and thus a substantial downsizing of the company itself. The alternative could have been the use of an open-core-like strategy, for example creating a scalable JVM designed to auto-partition code execution on a network of computers. The basic JVM could have been dual licensed, with the enhanced one released on a proprietary basis; this could have leveraged the exceptional Sun expertise in grid and parallel computing, filesystems and introspection systems.

But Sun never managed to complete the path – it wandered left and right, with lots of subprojects that were started and abandoned. The embracing of PostgreSQL, its later abandonment, the later embrace of MySQL, which was then not integrated anywhere; the creation of substantial OSS projects from their proprietary offerings, followed by a loss of interest as soon as a project started to become a threat to the proprietary edition. It is no surprise that, despite the incredible potential, Sun never recouped much of their OSS investment (despite the great growth in their latest quarters, the OSS services remained a small portion of their revenues). Now that Oracle has taken control, I believe that Sun “openness” will quickly fade towards small, utilitarian projects – so, even if now everyone looks at Oracle with anger, no one at Oracle could care less.

Why did Oracle sue? The blogosphere is exploding with possible answers; my own two hypotheses are:

- Oracle found a substantial technology it acquired (Java) losing value in what is the hottest tech market today, namely mobile systems. Sun had no credible plan to update JavaME, no credible alternative, and thus Android (which is loosely Java-based) is at the same time a threat to an acquired asset and (from their point of view) a stolen technology. Since anyone can follow the same path, Oracle wants to make sure that no one else would try to leverage Java to create an unlicensed (and uncontrolled) copy.

- Oracle wants a piece of the mobile enterprise market, and the alternatives are unavailable (Apple does not want anything to do with Java, Blackberry is a JavaME licensee, Windows Mobile is backed by arch-rival Microsoft). Android is working well, grows incredibly fast, and Oracle wants a piece of it; Google probably rebuffed initial contacts, and now Oracle is showing the guns to make Google obey. I am skeptical, however, that Google would back down on what is becoming its most important growth path. The lawsuit itself is quite weak, and Google would risk too much by licensing the TCK from Oracle; they would basically destroy their opportunity for independent development. It is never a good idea to corner someone – if you leave no alternative, fight is the only answer.

I believe that the first one is the most probable one; Larry Ellison should know that cornering Google would not be sufficient to make them capitulate – they have too much to lose. But this will not be sufficient to create an opportunity for Oracle; I believe that the lawsuit will actually bring nothing to Oracle, and lots of advantages to Google. But only time will tell; the only thing that I can predict for sure right now is that Solaris will quickly fade from sight (as it will be unable to grow at the same rate as Linux) exactly like AIX and HP-UX: a mature, backroom technology, but nothing that you can base a growth strategy upon.

Oracle/Google: the patents and the implications

Posted by cdaffara in blog, divertissements on August 13th, 2010

Just as LinuxCon ended, Oracle announced that it had filed suit for patent and copyright infringement against Google for its implementation of Android; as an Oracle spokesperson said, “In developing Android, Google knowingly, directly and repeatedly infringed Oracle’s Java-related intellectual property. This lawsuit seeks appropriate remedies for their infringement … Android (including without limitation the Dalvik VM and the Android software development kit) and devices that operate Android infringe one or more claims of each of United States Patents Nos. 6,125,447; 6,192,476; 5,966,702; 7,426,720; RE38,104; 6,910,205; and 6,061,520.” (some more details in the copy of the Oracle complaint). Apart from the slight cowardice of waiting until after LinuxCon to announce it, the use of the Boies Schiller legal team (the same one used by SCO) would be ironic on its own (someone is already calling the company SCOracle).

The patent claims are:

- Protection domains to provide security in a computer system

- Controlling access to a resource

- Method and apparatus for pre-processing and packaging class files

- System and method for dynamic preloading of classes through memory space cloning of a master runtime system process

- Method and apparatus for resolving data references in generated code

- Interpreting functions utilizing a hybrid of virtual and native machine

- Method and system for performing static initialization

Let’s skip the patent analysis for a moment, and let’s focus on the reasons behind this. Clearly, it is a move typical of mature industries: when a competitor is running past you, you try to put a wrench in its engine. That is a typical move, and one of the examples of why doing things by the book in this modern, collaborative world is wrong. Not only that, but I believe that previous actions by Sun made this threat clearly useless – even dangerous.

Let’s clear the table from the actual patent claims: the patents themselves are quite broad, and quite generic; a good example of what should not be patented (the security domain one is a good example; look at sheet 5 and you will find the illuminating flowchart with the representation of: do you have the rights to do it? if yes, do it, if no, do nothing. How brilliant). Also, the Dalvik implementation is quite different from the old JRE one, and I have strong suspicions that the actual Dalvik method is substantially different. But that is not important. I believe that there are two main points that Oracle should have checked before filing the complaint (but, given the use of Boies Schiller, I believe that they still have to learn from the SCO debacle): first of all, Dalvik is not Java and Google never claimed any form of Java compatibility. Second, there is a protection for patents as well, just hidden in recent history.

On the first point: in the complaint, Oracle claims that “The Android operating system software “stack” consists of Java applications running on a Java-based object-oriented application framework, and core libraries running on a “Dalvik” virtual machine (VM) that features just-in-time (JIT) compilation”. On copyrights, Oracle claims that “Without consent, authorization, approval, or license, Google knowingly, willingly, and unlawfully copied, prepared, published, and distributed Oracle America’s copyrighted work, portions thereof, or derivative works and continues to do so. Google’s Android infringes Oracle America’s copyrights in Java and Google is not licensed to do so … users of Android, including device manufacturers, must obtain and use copyrightable portions of the Java platform or works derived therefrom to manufacture and use functioning Android devices. Such use is not licensed. Google has thus induced, caused, and materially contributed to the infringing acts of others by encouraging, inducing, allowing and assisting others to use, copy, and distribute Oracle America’s copyrightable works, and works derived therefrom.”

Well, it is wrong. Wrong because Google did not copy Java – and actually never mentions Java anywhere. In fact, the Android SDK produces Dalvik (not Java) bytecodes, and the decoding and execution pattern is quite different (and one of the reasons why older implementations of Dalvik were so slow – they were made to conserve memory bandwidth, which is quite limited in cell phone chipsets). The thing that Google did was to “copy” (or, for a better word, take inspiration from) the Java language; but as the recent SAS-vs-WPS lawsuit found, “copyright in computer programs does not protect programming languages from being copied”. So, unless Oracle can find pieces of documentation that were lifted verbatim from the Sun one, I believe that the copyright part is quite weak.

As for patents, a little reminder: while copyright covers specific representations (a page of source code, a Harry Potter book, a music composition), software patents cover implementations of ideas, and if the patent is broad enough, all possible implementations of an algorithm (let’s skip for the moment the folly of giving monopoly protection on ideas. You already know what I think about it); so, if in any way Oracle had, now or in the past, given full access to those patents through a licensing that is transferable, Google is somehow protected there as well. And – guess what? That really happened! Sun released the entire Java JDK under the GPLv2 with the Classpath exception, granting with that release full rights of use and redistribution of the IPR embodied in what was released. This is different from the TCK specification, which Google wisely never licensed, because the TCK license requires, for the patent rights to be transferred, that development be limited to enhancements or modifications of the basic JDK as released by Sun.

But, you would say, Dalvik is independent from OpenJDK, so patents are not transferred there. So, include the code that is touched by the patents from the OpenJDK within Dalvik – compile it, and make a connecting shim, include it in a way that is GPLv2 compatible. The idea (just an idea! and IANAL of course..) is that through the release of the GPL code Sun gave an implicit license to embedded patents that is connected with the code itself. So, if it is possible to create an aggregate entity of the Dalvik and OpenJDK code, the Dalvik one would become a derivative of the GPL license, and would obtain the same patent protection as well. That would be a good use of the GPL, don’t you think?

What will be the result of the lawsuit? First of all, the open source credibility of Oracle, already damaged by the OpenSolaris affair, is now destroyed. It is a pity – they have lots of good people there, both internal and through the Sun acquisition; after all, they are among the 10 largest contributors to the Linux kernel. That is something that will be very difficult to recover.

Second, Google now has a free, quite important gift: the attention has been moved from their recent net neutrality blunder, and they are again the David of the situation. I could not imagine a better gift.

Third, with this lawsuit Oracle basically announced to the world that Java in mobile is dead. This was actually something that most people already knew – but seeing it in writing is always reassuring.

Update: Miguel de Icaza claims that “The Java specification patent grant seems to be only valid as long as you have a fully conformant implementation”, but that applies only to the Standard Implementation of Java, not OpenJDK. Sorry Miguel – nice try. Better luck next time.

Update 2: cleaned the language on the phrase on patents, ideas and implementation that was badly worded.

Update 3: clarified the Dalvik+OpenJDK idea.

Estimating source-to-product costs for OSS: an experiment

Posted by cdaffara in OSS business models, OSS data on August 10th, 2010

One of my recurring themes in this blog is related to the advantages that OSS brings to the creation of new products; that is, the reduction in R&D costs through code reuse (some of my older posts: on reasons for company contribution, Why use OSS in product development, Estimating savings from OSS code reuse, or: where does the money comes from?, Another data point on OSS efficiency). I already mentioned the study by Erkko Anttila, “Open Source Software and Impact on Competitiveness: Case Study” from Helsinki University of Technology, where the author analysed the degree of reuse done by Nokia in the Maemo platform and by Apple in OSX. I have done a little experiment on my own, by asking IGEL (to which I would like to express my thanks for the courtesy and help) for the source code of their thin client line, and through inspecting the source code of the published Palm source code (available here). Of course it is not possible to inspect the code for the proprietary parts of both platforms; but through some unscientific drill-down in the binaries for IGEL, and some back of the envelope calculation for Palm I believe that the proprietary parts are less than 10% in both cases (for IGEL, less than 5% – there is a higher uncertainty for Palm).

The actual results are:

- Total published source code (without modifications) for IGEL: 1.9GB in 181 packages; total amount of patch code: 51MB in 167 files (the remaining files are not modified). Average patch size: 305KB, Patch percentage on total published code: 2.68%

- Total published source code (without modifications) for Palm: 1.2GB in 106 packages; total amount of patch code: 55MB in 83 files (the remaining files are not modified). Average patch size: 664KB, Patch percentage on total published code: 4.58%
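The two derived figures in each bullet (average patch size and patch percentage) follow directly from the sizes and file counts. The short Python sketch below recomputes them; it assumes decimal units (1GB = 1000MB, 1MB = 1000KB), and the class and field names are purely illustrative. Because the published sizes are rounded, the recomputed Palm average comes out slightly below the 664KB quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PatchStats:
    """Published source size and patch volume for one platform (hypothetical helper)."""
    name: str
    source_mb: float   # total unmodified published source, in MB (decimal units assumed)
    patch_mb: float    # total size of patch code, in MB
    patch_files: int   # number of patch files

    @property
    def average_patch_kb(self) -> float:
        # Average size of a single patch file, in KB.
        return self.patch_mb * 1000 / self.patch_files

    @property
    def patch_percentage(self) -> float:
        # Patch code as a percentage of the total published source.
        return 100 * self.patch_mb / self.source_mb


# Figures taken from the two bullets above.
igel = PatchStats("IGEL", source_mb=1900, patch_mb=51, patch_files=167)
palm = PatchStats("Palm", source_mb=1200, patch_mb=55, patch_files=83)

for platform in (igel, palm):
    print(
        f"{platform.name}: average patch {platform.average_patch_kb:.0f}KB, "
        f"patches are {platform.patch_percentage:.2f}% of published code"
    )
```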

If we add the proprietary parts and the code modified we end up in the same approximate range found in the Maemo study, that is around 10% to 15% of code that is either proprietary or modified OSS directly developed by the company. IGEL reused more than 50 million lines of code, and modified or developed around 1.3 million lines of code. Without OSS, that would have cost more than 2B$ and required a full staffing of more than 700 people for an effort duration of more than 20 years. Through OSS, the estimated cost (using the more appropriate semidetached model) is around 90M$, with an average staffing of 150 people and an estimated project duration of 5 years. Palm has a similar cost (the amount of modified code is quite similar), but starting from a smaller amount of reused code (to recode everything would still require 1.2B$, 570 people and 18 years of work). We have to add some additional costs (for an explanation you can check my previous post on the proper use of COCOMO II and OSS, using the model by Abts, Boehm and Bailey) that would bring the total cost to a little less than 100M$ (still substantially less than the full cost of development from scratch).
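As a rough illustration of how staffing and duration estimates of this kind are derived, here is a minimal Python sketch of the Basic COCOMO equations with Boehm's standard coefficients. It is an assumption that this simplified model is close to what was used for the figures above; the post itself refers to COCOMO II and the Abts, Boehm and Bailey extensions, which add cost drivers not modelled here. The function and class names are hypothetical, and no cost is computed because the salary and overhead figures are not given in the text.

```python
from dataclasses import dataclass

# Basic COCOMO coefficients (a, b, c, d) per project mode:
#   effort   = a * KLOC**b    (person-months)
#   schedule = c * effort**d  (months)
COEFFICIENTS = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semidetached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


@dataclass(frozen=True)
class Estimate:
    effort_person_months: float
    duration_months: float

    @property
    def average_staff(self) -> float:
        # Average headcount needed to deliver the effort within the schedule.
        return self.effort_person_months / self.duration_months

    @property
    def duration_years(self) -> float:
        return self.duration_months / 12


def basic_cocomo(sloc: int, mode: str = "semidetached") -> Estimate:
    """Estimate effort and schedule for `sloc` source lines using Basic COCOMO."""
    a, b, c, d = COEFFICIENTS[mode]
    kloc = sloc / 1000
    effort = a * kloc ** b
    duration = c * effort ** d
    return Estimate(effort, duration)


# Roughly 1.3 million modified or newly developed lines, semidetached mode.
modified = basic_cocomo(1_300_000, "semidetached")
print(
    f"effort: {modified.effort_person_months:.0f} person-months, "
    f"duration: {modified.duration_years:.1f} years, "
    f"average staff: {modified.average_staff:.0f}"
)
```

With these inputs the sketch gives roughly 150 people over roughly five years, in line with the staffing and duration quoted above. The same function can be called with the reused line count to get a feel for the from-scratch scenario, keeping in mind that the choice of mode changes the result considerably.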

Open source makes it possible to create a derived product (in both cases of substantial complexity) while reducing the cost of development to 1/20, the time to market to 1/4 and the total staff necessary to less than 1/4, and in general reducing the cost of maintaining the product after delivery. I believe that it would be difficult, for anyone producing software today, to ignore results of this kind.

Addendum: I received some requests for specific parts of source code from people willing to check the kind of modifications performed. For Palm, the website provides both original source code and patches. For IGEL, I requested the access to the source code, and was kindly provided with a username and password to download it. Since the single most requested file seems to be the modified rdesktop, I have linked the GPL sources here.

About contributions, Canonical and adopters

Posted by cdaffara in blog, divertissements on July 30th, 2010

It is always strange to see the savage infighting that sometimes happens in the free and open source world – sometimes, like a bull in front of a red rag, the net suddenly lights its flame-throwers and decides to roast someone. Today’s target is Canonical, makers of the Ubuntu distribution, accused of being leeches and “stealing” from the open source communities, giving little or nothing back, and profiting from that. The issue arose from the publication of the Gnome census, which showed Canonical contributing only a small share of the code. As Sam Varghese writes, “Canonical derives the base for Ubuntu from the Debian project. It takes liberally from many free and open source software projects to produce a distribution. While this distribution is available for free download, Canonical is also basing a business on it, and developing ways and means of making money off Ubuntu.” (which is, probably, a crime). He wrote something similar before, and Greg DeKoenigsberg has an even more vitriolic argument in his post “Red Hat, 16%. Canonical, 1%”, that happily buries under the ground Canonical, Ubuntu and most Ubuntu fanboys.

Well, you’re all wrong.

Not because the percentages are wrong (although they are nearly useless, as Canonical is relatively recent and Red Hat is not, because Gnome is only one of the projects and there are many others, etc.) but because they measure too little. I already wrote in the past about the enormous effort that goes into non-code contributions, and that no one measures (as for OpenOffice, there are more contributors in non-code parts than in code); there is also a substantial effort in creating a product out of contributions. And Ubuntu certainly invested in product engineering, marketing, even engineering (less than Red Hat? So what? Large IT consulting companies are getting paid millions for open source-based systems, and I never saw a contribution at all). When Matt Asay claims that bringing Ubuntu to millions of people is a contribution, he is claiming an absolute truth; every time Canonical manages to bring a press release out it is making a huge contribution. Maybe less code than others, but this is not a beauty contest – this is a cooperative effort for building a better future, not a race to see who is the nicest or worked the hardest. It is true that Canonical (I hope) profits from OSS: well, it is one of the most important things for OSS, as it demonstrates that OSS is sustainable, that people can live off OSS services and products, all the while improving our world. I repeat: maybe someone at Red Hat is not happy about the visibility of Canonical, given all the contributions Red Hat makes? I am sorry – and I am quite happy to show at all my talks that Red Hat is an incredibly good and well-managed company, that has open sourced all the proprietary products it acquired – and invests an incredible amount of effort in engineering in the open. I like them a lot (no, I don’t work for them, and never used one of their services), but I like Canonical as well, because they are investing heavily in the desktop market, a market that is not the focus of Red Hat any more and that I believe is quite important.

This is not a contest. We should be happy for every contribution that we receive, whether small, large, strange or different. Should it be more? Maybe. But don’t overlook all those things that are being done, some of them outside of pure code. Because, as I wrote in the past, there is much more than code in an OSS project.

The basis of OSS business models: property and efficiency

Posted by cdaffara in OSS business models on July 26th, 2010

It is now time to write the closing part of our long multi-part look at open source business models. After all the discussion on how to look at the various parts of a model and how to improve it, I will try to summarize a bit on how to look at an OSS business model, and what implications can be made from a specific choice (for once, without mentioning open core).

The basic idea behind business models is quite simple: I have something or can do something – the “value proposition” – and it is more economical to pay me to do or get this “something” instead of doing it yourself (sometimes it may even be impossible to find alternatives, as in natural or man-made monopolies, so the idea of doing it yourself may not be applicable).

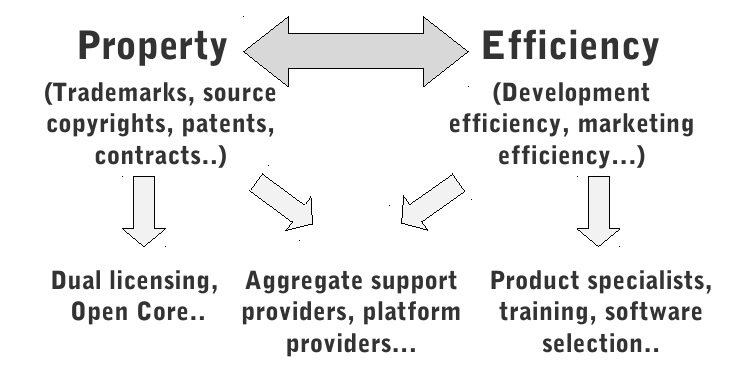

There are two possible sources for the value: a property (something that can be transferred) and efficiency (something that is inherent in what the company does, and how it does it). With Open Source, usually “property” is non-exclusive (with the exception of Open Core, where part of the code is not open at all). Other examples of property are trademarks, patents, licenses… anything that may be transferred to another entity through a contract or legal transaction.

Efficiency is the ability to perform an action with a lower cost (both tangible and intangible), and is something that follows the specialization in a work area or appears thanks to a new technology. Examples of the first are simply the decrease in time necessary to perform an action when you increase your expertise in it; the first time you install a complex system may require lots of effort, and this effort is reduced the more you experience the tasks necessary to perform the installation itself.

An example of the second is the introduction of a tool that simplifies the process (for example, through image cloning), which introduces a huge discontinuity, a “jump” in the graph of efficiency versus time.

These two aspects are the basis of all the business models that we have analysed in the past; it is possible to show that all of them fall in a continuum between properties and efficiency:

Among the results of our past research project, one thing that we found is that property-based projects tend to have lower contributions from the outside, because a contribution requires a legal transaction to become part of the company’s properties; think for example of dual licensing: for code to become part of the product source, an external contributor needs to sign over their rights to it, to allow the company to sell the enterprise version alongside the open one.

On the other hand, right-handed models based purely on efficiency tend to have higher contributions and visibility, but lower monetization rates. As I wrote many times, there is no ideal business model, but a spectrum of possible models, and companies should adapt themselves and their model to changing market conditions. Some companies start as pure efficiency based, and build an internal property with time; some others may start as property based, and move to the other side to increase contributions and reduce the engineering effort (or to enlarge the user base, to create alternative ways of monetizing users).

This is the last post in our little mini-series on OSS business models; I hope that my archetypal three readers will have enjoyed it as much as I enjoyed writing it. Of course, I will be happy to read and respond to any comment – even negative ones.

The relationship between Open Core, dual licensing and contributions

Posted by cdaffara in OSS adoption, OSS business models on July 21st, 2010

Open Core continues to receive substantial bashing, both after the announcement of the new SugarCRM 6 and after the recent OpenStack initiative. Sugar introduces a new interface that is not available in the open source edition (they are not the first in this: actually, Open-Xchange did it before them, making the javascript code for the new AJAX interface not usable for commercial activities), but despite this they claim “We are an open source company”. In the OpenStack announcement, The Register reports that it was not possible for NASA to introduce the changes to Eucalyptus because that would have undermined the capability of the company to make users pay for the enterprise edition. I already wrote in the past that Open Core is not evil per se, but that it does introduce difficulties in encouraging external participation; both because there is a very thin line in feature selection between the community and enterprise edition, and because open core naturally hampers participation. I had some readers asking me why, and I will respond with a subset of my LinuxTag slides:

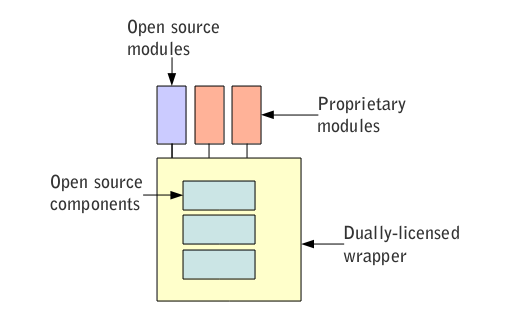

Open core is usually built from a set of internal open source components held together by a dual-licensed wrapper, plus proprietary modules on the outside. One of the best examples of this is Zimbra (an excellent product on its own) but MySQL in recent editions can be included in the same group. As discussed in previous posts, dual licensing hampers contributions because it requires an explicit agreement on ceding rights to the company that employs it, in order to be able to relicense the code for the proprietary edition. This means that Open Core companies will have an easier time monetizing their software, but will receive far fewer contributions in exchange. As I wrote before, it is simply not possible to get something like Linux or Apache with Open Core.

Again: open core is not bad per se (but I would have been more cautious in calling Sugar “an open source company”, for whatever definition you have of that). But it is a tradeoff: monetization versus contributions. And, my bets are on contributions, as OpenStack demonstrates – you need leverage and external resources to go beyond what a single company can do.

The new EveryDesk and EveryDesk/MED health care desktops

We have finally released the new version of the linux-on-USB EveryDesk system, both in the plain version and the Medical release, that includes an IHE certified DICOM medical image browser, a complete R-based statistical environment and OpenOffice enhanced with a complete medical dictionary. The new version is faster, should be more compatible with older hardware, and in general was found by our beta testers to be fairly complete.

Its main appeal is that it can be tested without any installation: just download the image, copy it on the key and try. It boots fast, it is totally modifiable, provides local applications, Prism for web-apps, Chromium and several remote computing applications like the VMware View client, clients for IBM mini and mainframes, a full Java environment for Citrix, and much more.

The medical version still misses the final DICOM certification (you will see in the startup splash screen that it has no CE marking); we are working towards the final release, which will be certified and significantly improved. The R environment is also missing some modules specific to bioengineering that were not ready in time for release; we expect to have a beta-2 version ready by mid-August.

We also have a completely new website, http://www.everydesk.org, where we added a substantial amount of material and which will be used to publish the training videos that we are preparing to help companies adopt the desktop for their own internal use.

We have introduced a new policy: we offer unlimited and free support and helpdesk services for all users, commercial or not. To receive private answers we only ask for an introductory email that provides details of the institution, contact points and the actual or expected number of EveryDesk installations. We will provide a separate customer ID, and it will be used for issue tracking. Large scale customers can request a private portal, with issue and bug tracking, device management and group update as a separate commercial option.

We welcome health care institutions that are interested in trying EveryDesk/MED, especially from developing countries; let us know what additional applications you would like to see added to the default platform.

For more information: http://www.everydesk.org

An on-vacation post on Open core

Posted by cdaffara in OSS adoption, OSS business models on July 6th, 2010

[Note: since I am writing this from a sunny beach, with a cell phone, I will not be able to add more than a few links to external pages. Will add the rest of them at my return, after the 12th of July]

It seems that Open Core continues to be the source of significant debate; I wrote quite a few posts in the past, and Open Core was one of our researched business models (for more details, see my LinuxTag presentation on business models). I would like to enter the debate again with a few short comments of my own:

- Companies using OC are not the devil, and should not be called names because of their choice of business model. Actually, there are no good and bad business models - only models that work, and those that do not. So, if open core works for a company, that’s a good thing.

- Open core models are somewhat confusing for adopters. In my work as a consultant for more than 100 companies and public administrations, explaining open core is one of my most common tasks. And the marketing message of companies is confusing: if you go to the Zimbra webpage (no offence against Zimbra, which is a company/product I love and use as an example of good practice) you see the phrase “Zimbra – the leader in open source email and collaboration”, not “Zimbra – the leader in open source and proprietary email” (not that the phrase would win any contest), and the same goes for all the other open core companies. This is not, in my opinion, such a negative point if the website explains the difference between versions in a simple way, as for example both Zimbra and Alfresco do.

- It is true that open core models tend to have higher revenue than non-OC models. It is also true that OC intrinsically attracts a limited number of contributions from outside (as we found in FLOSSMETRICS by analysing a few hundred packages, and as can be found in the mentioned LinuxTag presentation). So, you may have a higher monetization ratio, but you basically forfeit external contributions. The CEO should decide what is more important, so the decision is not “ethical”, but practical and based on economics. You will never get the kind of participation that Linux, Apache and Eclipse have in an Open Core model. If that is ok for you – that’s great.

- The fact that most VCs are funding open core companies is just a data point. Lots of open source companies do well without VC funding.

- It is true that lots of people claim that “pure” open source models are not sustainable. Even my friend Erwin Tenhumberg (who is quite knowledgeable, expert and incredibly nice in his own right) had a slide in this sense in his LinuxTag presentation; and you can find lots of comments like that in many publications (something like “the majority of OSS companies adopt the so called mixed model”, despite this being actually false, as we found in our survey of OSS companies). The point, as said before, is that the important thing is not that there is a superior model, but that for every company and every market there is an optimal model: it may be OC, it may be pure services, or one of many combinations of our 11 building blocks. The optimal model changes with time and market conditions, and what is appropriate now may be wrong tomorrow.

- No open source model can achieve the kind of profit margins of proprietary companies. So, if you want to start your own OSS company, remember this basic fact. If you want the kind of profit margins of Microsoft or Oracle, forget it.

So, to end this post, there are three critical points: whether the model is clear for the adopter (and this should be a given, and actually nowadays I would say that most companies are absolutely honest and clear on this), whether the software in its open source edition provides sufficient functionality to be useful to a wide range of adopters (and this is a fine line to walk, and requires constant adaptation) and whether the increased monetization compensates for the lack of external contributions, that can substantially increase the value of the code base (you are trading cash for code and engineering, in a sense).

Can we put this to rest? End the name calling, be friends, and call all of us family? Especially since right now, under the sun of Fuerteventura where I am writing this, it seems difficult to fight.

[by the way: sorry for any misspelling. There is no spell checker here on this small screen...]

On WebM, H264, and FFmpeg implementation

Posted by cdaffara in blog, divertissements on July 6th, 2010

I was recently reminded by Gregory Maxwell of Xiph about the new, non-Google implementation of VP8 done within the context of FFmpeg, and many commenters on Slashdot observed that the fact that the implementation shares lots of code with the H264 part is further demonstration that VP8 is infringing on patents held by MPEG LA.

Actually, there is nothing in the implementation that suggests this, only the fact that some underlying algorithms are similar (but not identical). For example, the entropy coder is quite similar, and it certainly helps to reuse some of the highly optimized libraries that are within FFmpeg; this is, however, no indication of patent infringement. What I do suspect is that libvpx (Google’s implementation) will remain the “official” one, because there is no guarantee that the alternative implementation will properly apply the current IPR safeguards and efforts to avoid existing patents. In fact, some of the obvious “missing optimizations” in both the decoder and the encoder are clearly there to avoid patents, and this means that Google can be reasonably sure of being safe from IPR claims for the current code drop. If an FFmpeg developer adds some optimization (especially in the encoder) that implements a claimed part of a current patent, you end up with freely available source code that implements an IPR-covered claim, like most of FFmpeg actually.

So, to end this brief post: the existence of an implementation parallel to libvpx is a good thing; the fact that it shares lots of code with FFmpeg is no proof of IPR infringement, but on the other hand it is probably safer to use the libvpx one from Google, as it seems that it was developed explicitly to avoid existing IPR issues.

Some EveryDesk Use Cases

Now that our EveryDesk is out in the wild, I would like to provide a little background on the choices that were made in creating it, especially outlining some differences with previous approaches. EveryDesk starts with a set of assumptions: first of all, that every single barrier reduces the probability of adoption by an order of magnitude, and that it is extremely difficult to displace “what works”, but that there are lots of environments where current OSS and commercial offerings are not perfectly suited to their intended target.

I have previously addressed the use of the UTAUT model to study for example Google’s ChromiumOS offering; we applied the same model for our own desktop offering, modelled after the end of the COSPA project (one of the largest controlled experiments in the introduction of OSS in European Public Administration desktops). We have focused our initial efforts on the Health Care sector, thanks to our contract work with the regional health care agency of the Friuli region, but later generalized the approach for a wide range of activities using the same basic infrastructure.

First of all, what are the problems with the current commercial offerings?

- Hardware obsolescence: PC refresh cycles are already widely stretched thanks to the economic crisis, forcing users to adapt to less-than-modern IT infrastructures, both server and client side;

- Security: the basic security of most commercial offerings is barely adequate; to provide sufficient protection, several layers of security software need to be added to the basic OS, increasing resource consumption and aggravating the situation for less-than-modern hardware;

- Management: unless you are the lucky recipient of a fully managed (and costly) infrastructure, you will have to perform or have performed several management activities like patch and software management, backups and lots more.

Thin clients reduce management, but require substantial infrastructural investments; some applications are hard to port to Terminal Services or require substantial remotization bandwidth (or lots of additional software: think about video-conferencing in a TS environment, with all the hybrid local/remote channels enabled by tools like Citrix HDX). VDI requires even more complex systems, with an offering that is still maturing (with some stunning technical hacks, actually) and that has, for many installations, an unproven return on investment.

To summarize: desktop PCs are flexible, adaptable and usable without connectivity, but complex, fragile and difficult to manage. Thin (bitmap-based, like RDP or ICA) clients are slightly easier to manage and require little support, but they require substantial infrastructure investments, cannot work detached, and have only marginally lower management costs.

We aim for a middle-ground solution: EveryDesk is a locally executed OS that, when configured, provides the same remote management advantages as thin clients without the costly infrastructure (the only thing needed is storage, which is nowadays cheap and plentiful). The system is a real install, not a live CD, so the user/administrator can install applications or customize it in depth simply by using the image and then replicating it for all the people working in a company or administration. Updating it is simple: just execute the Update Manager!

While developing EveryDesk we identified a few potential use cases, and I would like to explain what advantage our hybrid model can have:

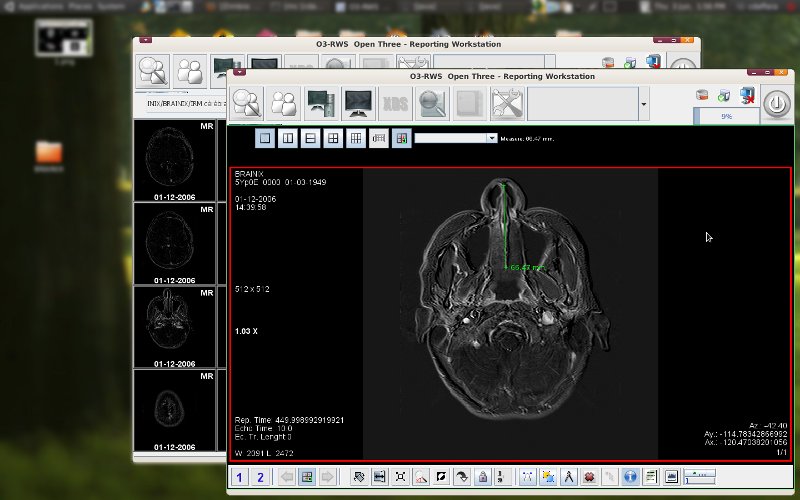

- Hospital worker: our initial use case. We designed the system so that national regulations on the handling of sensitive data could be complied with without any specific effort on the side of the user; that is, to make it nearly impossible for the worker to lose or disseminate data without an explicit and voluntary breach of confidentiality, and to make it possible to identify such a breach immediately. By moving user data to a centrally managed server, standard logging and identity management techniques can be applied easily to prevent data loss; as no private data is on the key (including passwords), losing the key or having it stolen is not sufficient to breach the system’s privacy. For our health care customization we added to the basic image an excellent radiology workstation system called O3, already in use in some Italian hospitals, a medical dictionary and some ancillary tools like the ImageJ image processing system.

- Another important use case is widely found in developing countries, and is the “Internet Café”. While it is true that mobile internet access is fast becoming a fundamental infrastructure, cost and efficiency reasons still make it sensible to have a physical, shared space with PCs. EveryDesk makes it possible to provide low-maintenance PCs with no hard disks, a central low cost storage, and simply give away the USB keys to the attendees. If a key stops working, it is simply a matter of re-copying the image on top of a new one to restore everything.

- Within companies and Public Administration, providing a diskless PC with EveryDesk allows the efficient use of even old PCs (EveryDesk takes 150MB of RAM with both Firefox and OpenOffice.org open), while providing, thanks to VirtualBox, the set of applications that are not available within Linux. In dispersed companies, where you have multiple sites, you can use a replicating file system (like the wonderful XtreemFS, developed within another EU-funded research project) that provides, in a totally open source solution, differential and efficient replicas across sites. This way you can use your VirtualBox image, stop it, let the system replicate it to the other sites, move to another city, fire up EveryDesk again and have all your data and status restored without the need for local persistent storage.

The idea of a real Linux install is not new; actually, some of the ideas were explored a few years ago in a Gentoo-based system called FlashLinux, which unfortunately has not been updated since 2005. We also introduced some of the ideas behind IBM SoulPad, namely the integration of virtualization within the environment, but reversed the concept (in SoulPad the virtualization layer is at the bottom, and is used to abstract the internal virtual machine from the hardware, as well as to provide easy suspend/resume functionality).

We plan to create an education-oriented edition, integrating some of the software tools already selected in projects like EduLinux; we also plan to backport some of the customizations of municipally-sponsored distributions like MAX (Madrid Linux) to try to provide a common basis for experimentation in public administrations across Europe.