
About contributions, Canonical and adopters

Posted by cdaffara in blog, divertissements on July 30th, 2010

It is always strange to see the savage infighting that sometimes happens in the free and open source world: sometimes, like a bull seeing red, the net suddenly lights its flamethrowers and decides to roast someone. Today’s target is Canonical, makers of the Ubuntu distribution, accused of being leeches and “stealing” from the open source communities, giving little or nothing back while profiting from them. The issue arose from the publication of the Gnome census, which measured how much each company contributes to Gnome, Canonical included. As Sam Varghese writes, “Canonical derives the base for Ubuntu from the Debian project. It takes liberally from many free and open source software projects to produce a distribution. While this distribution is available for free download, Canonical is also basing a business on it, and developing ways and means of making money off Ubuntu.” (which is, probably, a crime). He wrote something similar before, and Greg DeKoenigsberg makes an even more vitriolic argument in his post “Red Hat, 16%. Canonical, 1%”, which happily buries Canonical, Ubuntu and most Ubuntu fanboys.

Well, you’re all wrong.

Not because the percentages are wrong (though they are nearly useless: Canonical is relatively recent and Red Hat is not, Gnome is only one of many projects, etc.), but because they measure too little. I have already written about the enormous effort that goes into non-code contributions, which no one measures (in OpenOffice, for example, there are more contributors in non-code areas than in code); there is also a substantial effort in turning contributions into a product. And Ubuntu certainly invested in product engineering, marketing and even engineering proper (less than Red Hat? So what? Large IT consulting companies get paid millions for open source-based systems, and I have never seen a single contribution from them). When Matt Asay claims that bringing Ubuntu to millions of people is a contribution, he is stating an absolute truth; every time Canonical gets a press release out, it is making a huge contribution. Maybe less code than others, but this is not a beauty contest: this is a cooperative effort to build a better future, not a race to see who is nicest or works hardest. It is true that Canonical (I hope) profits from OSS: well, that is one of the most important things for OSS, as it demonstrates that OSS is sustainable, that people can live off OSS services and products while improving our world. I repeat: maybe someone at Red Hat is not happy about Canonical’s visibility, given all the contributions Red Hat makes? I am sorry, and I am quite happy to show in all my talks that Red Hat is an incredibly good and well-managed company that has open sourced all the proprietary products it acquired, and that invests an incredible amount of effort in engineering in the open. I like them a lot (no, I don’t work for them, and have never used any of their services), but I like Canonical as well, because they are investing heavily in the desktop market, a market that is no longer Red Hat’s focus and that I believe is quite important.

This is not a contest. We should be happy for every contribution we receive, whether small, large, strange or different. Should there be more? Maybe. But don’t overlook all the things that are being done, some of them outside of pure code. Because, as I wrote in the past, there is much more than code in an OSS project.

On WebM, H264, and FFmpeg implementation

Posted by cdaffara in blog, divertissements on July 6th, 2010

I was recently reminded by Gregory Maxwell of Xiph about the new, non-Google implementation of VP8 done within FFmpeg, and many commenters on Slashdot observed that the fact that this implementation shares a lot of code with the H264 part is further proof that VP8 infringes on patents held by MPEG-LA.

Actually, nothing in the implementation suggests this, only that some underlying algorithms are similar (but not identical). For example, the entropy coder is quite similar, and reusing some of the highly optimized libraries within FFmpeg certainly helps; but this is no indication of patent infringement. What I do suspect is that libvpx (Google’s implementation) will remain the “official” one, because there is no guarantee that the alternative implementation will properly maintain the current IPR safeguards and the efforts to avoid existing patents. In fact, some of the obvious “missing optimizations” in both the decoder and the encoder are clearly there to avoid patents, and this means that Google can be reasonably sure of being safe from IPR claims for the current code drop. If an FFmpeg developer adds an optimization (especially in the encoder) that implements a claimed part of a current patent, you can end up with freely available source code that implements an IPR-covered claim, like most of FFmpeg, actually.

So, to sum up this brief post: the existence of a parallel implementation of libvpx is a good thing; the fact that it shares a lot of code with FFmpeg is no proof of IPR infringement; on the other hand, it is probably safer to use Google’s libvpx, as it seems to have been developed explicitly to avoid existing IPR issues.

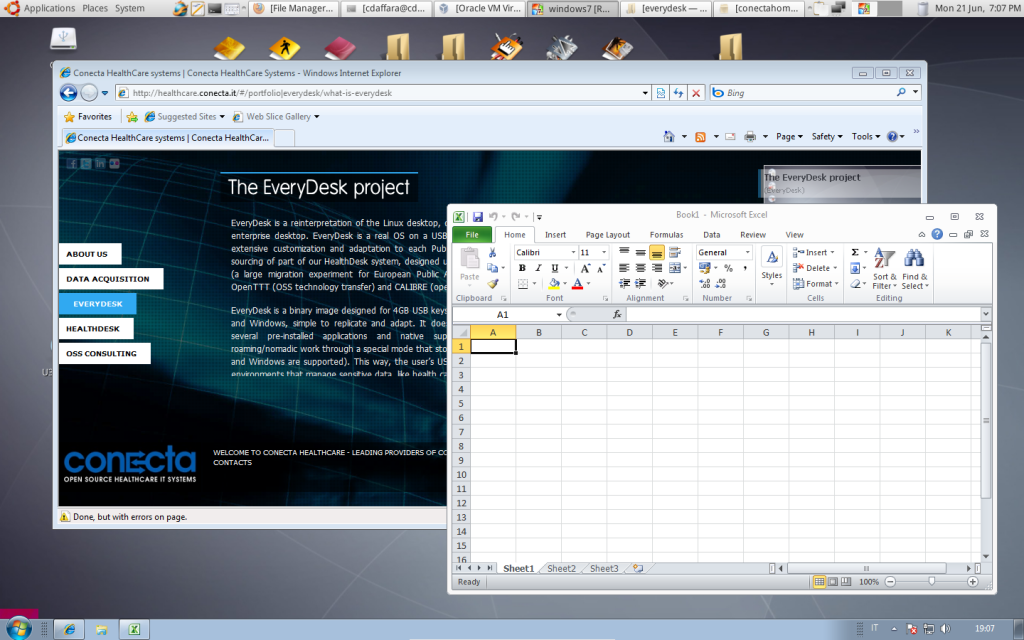

And now, for something totally different: EveryDesk!

Posted by cdaffara in OSS adoption, blog on June 21st, 2010

Now that most of our work for FLOSSMETRICS has ended, I had the opportunity to work on something different. As you know, I have worked on bringing OSS to companies and public administrations for nearly 15 years, and I had the opportunity to work on many different projects with many different and incredible people. One of the common things I discovered is that, to increase adoption, it is necessary to give every user a distinct advantage in using OSS, and to make the exploratory process easy and hassle-free.

So, we collected most of the work done in past projects and developed a custom desktop, designed to be explorable without installation, fast and built for real-world use; EveryDesk is a reinterpretation of the Linux desktop, designed to be used in public administrations or as an enterprise desktop. EveryDesk is a real OS on a USB key, not a live CD; this way the system allows for extensive customization and adaptation to the needs of each Public Administration. It is the result of open sourcing part of our HealthDesk system, designed using the results of our past European projects COSPA (a large migration experiment for European Public Administrations), SPIRIT (open source health care), OpenTTT (OSS technology transfer) and CALIBRE (open source for industrial environments).

EveryDesk is a binary image designed for 4GB USB keys, easy to install with a single command both on Linux and Windows, simple to replicate and adapt. It does provide a simple and pleasing user interface, with several pre-installed applications and native support for Active Directory. EveryDesk supports roaming/nomadic work through a special mode that stores all user data on a remote SMB server (both Samba and Windows are supported). This way, the user’s USB key contains no personal data, and can be used in environments that manage sensitive data, like health care or law enforcement.

The files and images can be downloaded from the SourceForge project page.

EveryDesk integrates a simple, easy-to-use menu derived from Novell usability research, providing one-click access to individual programs, documents and places, and easy installation of new software and updates thanks to the fully functional package manager.

EveryDesk includes support for Terminal Services, VNC, VMware View and other remote access protocols. One peculiarity we are quite happy with is the idea of simplified VDI: EveryDesk integrates the open source edition of VirtualBox and allows disk images to be mounted remotely, so the disk storage is remote and the execution is local. This way, VDI can be implemented by adding only storage (which is cheap and easy to manage), avoiding all the virtualization infrastructure.

The seamless virtualization mode of VirtualBox allows for quite good integration between Windows (especially Windows 7) and the local environment. Coupled with the fact that the desktop is small and runs in less than 100MB (with both Firefox and OpenOffice.org, it takes only 150MB), it makes a good substitute for a traditional thin client; it is manageable through CIM, and is commercially supported. Among the extensions developed, we have a complete ITIL-compliant management infrastructure, and digitally signed log storage for health care and law enforcement applications.

For more information: our health care home page, main site, on twitter, facebook, and of course here!

About software forges

Posted by cdaffara in blog, divertissements on June 17th, 2010

I had the opportunity to talk a little with Dirk Riehle at LinuxTag about business models, collaboration and infrastructures, and one of the topics was software forges, like SourceForge or GForge. I would like to provide a brief overview of our discussion, along with my reasoning about the future of such forges.

First of all, I am a strong believer in the idea that forges were one of the important elements for the maturation and creation of a large scale market of users and developers of open source; forges provided free, simple and no-cost infrastructure for the basic necessities of a project, like file storage, CVS, mailing lists and so on. In this sense, forges also helped in discovering software, by providing basic taxonomies of software code, and comprehensive search facilities.

But in my opinion two main aspects are reducing the potential of forges for recent projects, namely distributed development and information dissemination. One of the important evolutions in code development has been the widespread adoption of distributed version control, through Git, Bazaar, Mercurial and (to a lesser extent) other minor solutions. Git, for example, substantially increased the productivity of projects like Wine, and provides a good management framework for large-scale development by nearly independent groups, as in the case of the Linux kernel.

The other aspect is related to information dissemination: what happens in a project is scattered across bug tracking, mailing lists and forums (why the replication of features? how do you find out whether something was already solved somewhere else?); it is difficult for projects to interact with one another, and impossible to track the evolution of one project from another without someone in the middle subscribed to both. And, as Dirk graciously conceded, managing or adapting a forge is a real nightmare. I remember our past work on the Spirit forge (a healthcare-oriented forge that used digital certificates to authenticate and sign code entered in the platform) and I still get the shivers.

For this reason, I believe that future forges will be structurally different from the current ones: they will be based on small, efficient pieces, for example a central Git repo enhanced by external modules that subscribe to modifications in the code stream and pass this information to higher-level applications that, for example, produce graphs or link each atomic action to a wiki or tracking system. By moving from a monolithic tool to loosely coupled pieces, we can end up with something that is more “facebook-like” than forge-like, with individual apps that provide, for example, code quality services (like Sonar) or visualization services. I am a strong believer in a publish-subscribe mechanism for this, for example through XMPP, because it easily solves the problem of tracking strongly coupled projects. For example, if my code depends on an external project, I can subscribe to its code announcement streams or issue streams, since the same issues will probably apply to my code as well; this happens without explicit interaction, and with the opportunity to link issues to individual actions (commits, reports, etc.) that remain valid even if I fork the library, or act independently on modifications that will eventually be merged into a single tree. I believe that in the future the number of strong and weak couplings between projects will increase, and this will seriously limit the capabilities of current forges.
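To make the publish-subscribe idea a bit more concrete, here is a minimal sketch under stated assumptions: an in-memory broker stands in for an XMPP PubSub service, and the topic names, the `Event` fields and the `DownstreamTracker` class are all hypothetical, not part of any existing forge. It shows a dependent project subscribing to an upstream library's issue and commit streams, and linking each event by the upstream's own stable reference (so the link survives a fork).

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Event:
    """One atomic action published on a project's stream (hypothetical schema)."""
    project: str
    kind: str     # e.g. "commit" or "issue"
    ref: str      # stable identifier such as a commit hash or upstream issue ID
    summary: str


class Broker:
    """In-memory stand-in for a publish-subscribe service (e.g. XMPP PubSub)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._handlers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


@dataclass
class DownstreamTracker:
    """A dependent project that links upstream events to its own work."""
    project: str
    linked: list[tuple[str, str]] = field(default_factory=list)

    def on_upstream_event(self, event: Event) -> None:
        # Store the upstream's own reference rather than a local ID, so the link
        # stays meaningful even if this project forks the library later.
        self.linked.append((event.project, event.ref))


broker = Broker()
app = DownstreamTracker("myapp")
broker.subscribe("libfoo/issues", app.on_upstream_event)
broker.subscribe("libfoo/commits", app.on_upstream_event)

broker.publish("libfoo/issues", Event("libfoo", "issue", "issue-42", "crash on empty input"))
broker.publish("libfoo/commits", Event("libfoo", "commit", "3f2a9c1", "fix empty-input crash"))
print(app.linked)  # [('libfoo', 'issue-42'), ('libfoo', '3f2a9c1')]
```

The upstream project never needs to know who is listening: the publisher only pushes to a topic, which is exactly the "no explicit interaction" property described above.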

The H264 codec problem, or: we should find a better way

Posted by cdaffara in blog, divertissements on January 26th, 2010

I followed with great interest the intense debate on YouTube’s initial HTML5 experiments; given the prominent role of the video site in overall Flash usage, this has been heralded by some as a shining endorsement of HTML5, while others found its use of the H264 codec a sort of betrayal of the spirit of openness behind HTML. The reality is that this has very little to do with Flash, and more to do with the now-ubiquitous role of video; despite Flash’s continuous technological progress, the browser plugin is still blamed for substantial slowness, jerkiness and overall difficulties, especially with HD video. A deeply embedded video engine can interact better with the rest of the browser’s paint/repaint engine, is better integrated with the internal event loop and in general can provide a better user experience with lower CPU usage.

The use of H264 video was probably due to a combination of factors: first of all, Google is already an MPEG-LA licensee, meaning that the added cost will probably be very low; more important is the overall greater maturity of H264 encoders and decoders. In fact, I believe that Theora (in its more recent implementations) can provide quality comparable to H264, as Greg Maxwell showed, but the encoders still need to demonstrate that the excellent quality of Greg’s encodings is maintained across a wide range of material; in this sense, I am quite sure that in the next few months the quality differential will become very small, to the point where Theora and H264 are more or less technically equal.

The problem is all the material already encoded as H264 that would need to be converted. This means that it will never happen, as the cost of doing so is higher than the cost of buying an H264 license. What will happen is that, if Flash continues to be developed outside of the main browser code, more and more content providers will prefer to use HTML5 and the open standards, because this way it will be easier to provide better quality to end users, increasing the number of potential viewers.

This does not mean that Flash will go away (as much as I would love it to), as most of the functionality it offers (outside of video) cannot be replicated in a sensible way through other means. Gordon is capable of rendering level 1 code, Gnash supports some level 7 code, but in general there is no realistic way to ask all websites and content developers to throw out their Flash toolchests and start using something else. And there is no chance in hell that Adobe will open source its plugin (mainly due to IPR issues). What HTML5 can do at the moment is still not sufficient to replace ActionScript and the advanced graphics features of Flash; my hope is that the advantage of being integrated directly in the browser will make it easier for developers to start targeting standards that do have a free implementation.

(Disclaimer: I have been part of ISO JTC1 for a few years, and have been working on video codecs on commercial projects from 1999 to 2005)

ChromiumOS: a look in the code, and in the model (updated)

Posted by cdaffara in blog, divertissements on November 24th, 2009

The release of Google ChromiumOS was an event awaited by industry analysts with significant anticipation, and the overall impression after the announcement was that it went out with a fizzle, not a bang. Most comments centered on the obvious shortcomings of this first pre-alpha release, the significant limits in supported hardware, the reliance on networking for everything (especially the initial login), and the over-reliance on Google services. And all the comments are right and, at the same time, based on a general misperception of what a potential competitor for the most visible part of the IT infrastructure, namely the traditional desktop PC, can be. I had the opportunity to explore the code, build my own version, and evaluate the release in the context of the UTAUT model of technology adoption, and I believe that the approach is sound and sensible, and will change the market even if it fails.

The first misconception is the idea that ChromiumOS was designed as a desktop OS competitor, despite previous comments from Google spokespersons that the release would be targeted at a different market. The reality is that, even in ideal conditions and with technology prevalence (that is, the new technology is invariably and clearly superior to the old one), in the presence of strong network effects and market prevalence NO alternative can supplant the incumbent in a short period of time; at most, it can grow its market in small percentage increments. This is especially true if the incumbent has pricing flexibility, that is, it can lower prices to counter economic advantages by shifting the loss to some other market sector where there is less competition. This is what happened in the netbook market, where the potential loss of market share to Linux alternatives was thwarted by lowering the price point of the offered operating system. With ChromiumOS, Google makes a technological bet that is a clear continuation of its overall strategy, and that has serious potential to materialize.

It is not a desktop operating system. Desktop OSes are full-featured and flexible, and allow for unlimited installation of applications; ChromiumOS, on the other hand, is a thin shell designed to run the Chrome browser as a single application. So, everyone expecting Google to save the idea of the Linux desktop has missed the fundamental point that no one can fight for the desktop and win in a short amount of time without a massive monetary investment. But it is always possible to create a new market, and that’s exactly what Google is trying to do; similarly, when Apple launched the iPhone, very few believed that it would reach any substantial market share, forgetting that the iPhone was not a phone but an execution platform, something different from all previous smartphones, for which apps and web browsing were at most an afterthought. In this respect ChromiumOS resembles Moblin (and shares much code with it), but in an even more radical way.

It requires little or no maintenance and support. What is the single highest source of costs for PCs? Management and support: OS patching and installation/reinstallation, fixing applications, installing and removing apps, checking for malware, identity management… the list can go on forever. The real innovation in ChromiumOS is the use of an upgradeable read-only code frame, clearly mimicking set-top boxes that can upgrade themselves OTA (over the air), for example from a satellite channel. ChromiumOS manages this upgrade transparently and securely, safely handling interruptions and attacks. This, coupled with a fully encrypted local store, means that the hardware can effectively be thought of as a purely ephemeral device that can be replaced with limited configuration needs, and that large numbers of devices can be upgraded and managed without human intervention and in total security. Applications are embedded in web pages and managed as web pages, so maintenance and training requirements are limited.

It is not really tied to Google. Of course, in this first release it uses Google services heavily for everything, but changing that is trivial. The authentication part is managed by a PAM module that can be easily swapped, and login completion (which actually turns your login name into a Gmail account) is just a small modification of the SLiM login manager used by the OS to perform the initial login, and can be changed with a few lines of code. The same goes for the application list (the first icon on the top left of the screen), which is merely a hardwired URL: change it to your own portal address, and you get the same result without using Google. The only part that requires some work is the integration of Google SSO (through a complex cookie exchange mechanism); augmenting that with something like OpenSSO from Sun would not take more than a few days of work anyway.

It is not a SplashTop clone. There are several Linux-based instant-on environments designed to be integrated inside a flash BIOS; the most famous one is SplashTop, used in many motherboards and notebooks from Asus, Acer, HP, Sony and many others. The problem with this approach is that it is “fixed”: the image is difficult to update and upgrade, and this means that it rapidly loses appeal. ChromiumOS uses a trusted boot mechanism to ensure that upgrades are legitimate, but integrates it in a clean and smart way, making sure that users are continuously up to date.
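The post does not detail how ChromiumOS verifies and applies its upgrades, so the following is only a minimal sketch of one common pattern for "upgradeable read-only" systems, under explicit assumptions: two OS slots (A/B), where the update is written to the inactive slot, verified, and activated with a single switch. HMAC with a shared demo key is a stand-in for the public-key signatures a real trusted boot chain would use; `Device`, `sign` and `VENDOR_KEY` are hypothetical names.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass, field

# Assumption: a shared demo key and HMAC stand in for a real public-key
# signature checked against a key stored in read-only firmware.
VENDOR_KEY = b"demo-vendor-key"


def sign(image: bytes, key: bytes = VENDOR_KEY) -> str:
    """Vendor side: authenticate the SHA-256 digest of an OS image."""
    digest = hashlib.sha256(image).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


@dataclass
class Device:
    """Hypothetical device with two OS slots; only one is active at a time."""
    slots: dict[str, bytes] = field(default_factory=lambda: {"A": b"os-v1", "B": b""})
    active: str = "A"

    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    def apply_update(self, image: bytes, signature: str) -> bool:
        target = self.inactive()
        # 1. Write only to the inactive slot; the running system is untouched.
        self.slots[target] = image
        # 2. Verify what was actually written before trusting it.
        if not hmac.compare_digest(sign(self.slots[target]), signature):
            return False  # truncated or tampered image: keep booting the old slot
        # 3. Switch with a single assignment; there is no half-updated state.
        self.active = target
        return True

    def boot(self) -> bytes:
        return self.slots[self.active]


device = Device()
new_os = b"os-v2"
print(device.apply_update(new_os[:3], sign(new_os)))   # False: interrupted download
print(device.apply_update(b"os-evil", sign(new_os)))   # False: tampered image
print(device.apply_update(new_os, sign(new_os)))       # True
print(device.boot())                                   # b'os-v2'
```

The design choice worth noting is that an interruption or an attack can only ever damage the slot that is not being booted, which is what makes unattended upgrades of large numbers of devices plausible.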

It does require the net most of the time, but not always. The first login requires a working connection, but then the credentials are hashed and stored in a cache wallet, which allows login even in the absence of a connection. If the pages allow for detached operation (using Gears, HTML5 persistent storage, or similar mechanisms), the system will work even without a connection. It is a stopgap solution, but a sensible one: most of the time spent in desktop applications is centered on online services that are unusable without a connection, so it makes sense when considering the OS as something that does not compete in the same market as a traditional PC. Local, cached web applications may provide more flexibility in the future, but much effort is needed to make this a worthwhile path. To see how people spend time on the PC, we can use the data from Wakoopa, which recently published a measurement of time spent per application on Windows, OS X and Linux; on Windows, for example, time is spent with:

- Firefox (28.71%)

- Internet Explorer (6.88%)

- Google Chrome (6.62%)

- Windows Explorer (5.92%)

- Windows Live Messenger (4.25%)

- Opera (2.97%)

- Microsoft Office Word (2.51%)

- Microsoft Office Outlook (2.22%)

- World of Warcraft (1.45%)

- Skype (1.30%)

Apart from Microsoft Word, no other application can be used without a connection; at the same time, most of these applications may be supplanted by future web applications, if the evolution of HTML and related standards continues at the current pace. For games, up-and-coming standards like WebGL and O3D may provide this in a “clientless” way; this is similar to Quake Live, which at the moment requires an additional plug-in but could potentially be recoded using only those standards.
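The offline login described earlier in this post (credentials hashed on the first online login, then checked against a local "cache wallet") can be sketched as follows. This is a minimal illustration under assumptions, not ChromiumOS code: the post does not say which hash is used, so PBKDF2 with a per-user random salt and the iteration count are our choice, and `online_check` is a hypothetical stand-in for the remote (Google or PAM-backed) authentication.

```python
from __future__ import annotations

import hashlib
import hmac
import os
from typing import Callable

ITERATIONS = 100_000  # assumed PBKDF2 work factor, not a ChromiumOS value


class CredentialCache:
    """Hypothetical 'cache wallet': salted password hashes kept on the device."""

    def __init__(self, online_check: Callable[[str, str], bool]) -> None:
        self._online_check = online_check  # stand-in for the remote login service
        self._wallet: dict[str, tuple[bytes, bytes]] = {}  # user -> (salt, hash)

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

    def login(self, user: str, password: str, online: bool) -> bool:
        if online:
            if not self._online_check(user, password):
                return False
            # Successful online login: refresh the cached hash (never the password).
            salt = os.urandom(16)
            self._wallet[user] = (salt, self._hash(password, salt))
            return True
        # Offline: only users who have logged in online before can enter.
        entry = self._wallet.get(user)
        if entry is None:
            return False
        salt, stored = entry
        return hmac.compare_digest(stored, self._hash(password, salt))


accounts = {"alice": "s3cret"}  # hypothetical remote account store
cache = CredentialCache(lambda user, pw: accounts.get(user) == pw)
print(cache.login("alice", "s3cret", online=False))  # False: no online login yet
print(cache.login("alice", "s3cret", online=True))   # True: hash now cached
print(cache.login("alice", "s3cret", online=False))  # True: checked locally
print(cache.login("alice", "wrong", online=False))   # False
```

Only a salted, deliberately slow hash is stored, so losing the device does not directly expose the password; combined with the encrypted local store mentioned above, this keeps the hardware close to "ephemeral".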

It integrates digital identities better than anyone else. You log in once; then everything just works. Enterprise users with large-scale SSO systems sometimes experience this, but it is not that common among consumers and smaller companies, and it is a great productivity tool. It is just the beginning: more sophisticated user interfaces are needed (this one, for example, would be great), but many companies (including Microsoft) are making great progress in this direction.

It introduces a different model. Desktop PCs are flexible, adaptable, usable without connectivity, complex, fragile and difficult to manage. Thin clients (bitmap-based, like RDP or ICA) are slightly easier to manage and require no support, but require substantial infrastructure investments, cannot work detached and have only marginally lower management costs. The model adopted by Google leverages local computing power for rendering pages, reducing back-end costs; it is simpler to manage, requires no support and can integrate rich functionality through plug-ins (or browser features), like 3D (with WebGL and O3D) or native processing (through NaCl), but always within the context of web-delivered applications.

The future will be the final judge; after all, even if something is not directly successful, it may “seed” a future evolution capable of shaking the market substantially. The real impact of Negroponte’s OLPC was not the machine itself (despite the boatloads of innovations it contained) but the re-framing of the netbook market; similarly, maybe it will not be ChromiumOS that leads the change, but I believe it is a bold statement, in fact much bolder than the code that was released.

See you at OMAT Rome!

I am grateful to Flavia Marzano for the invitation to be part of the roundtable on “applications and services for handling digital assets”, where I will present an overview of tools and best practices for using open source in the context of Enterprise 2.0. It is part of OMAT360, the oldest running conference on digital information management, started in 1990, and a wonderful opportunity to present the latest results from FLOSSMETRICS.

The conference is free, with a registration page here and an English presentation here. I would love to use the opportunity to meet anyone who may be interested in these topics, or in OSS in general.

2020 FLOSS Roadmap, 2009 Version published

Posted by cdaffara in OSS adoption, blog on October 13th, 2009

Having contributed to the new edition of the 2020 FLOSS Roadmap, I am happy to forward the announcement about the main updates and changes in the 2020 FLOSS Roadmap document. I am especially fond of the “FOSS is like a Forest” analogy, which in my opinion captures well the hidden dynamics created when many different projects form an effective synergy, something that may be difficult to perceive for those who are not within the same “forest”.

For its first edition, the Open World Forum launched a forward-looking initiative unique in the world: the 2020 FLOSS Roadmap (see 2008 version). This Roadmap is a projection of the influences that will affect FLOSS until 2020, describing all FLOSS-related trends over this period as anticipated by an international workgroup of 40 contributors, and highlighting 7 predictions and 8 recommendations. The 2009 edition of the Open World Forum led to an update of this Roadmap reflecting the evolutions observed during the last months (see OWF keynote presentation). According to Jean-Pierre Laisné, coordinator of 2020 FLOSS Roadmap and Bull Open Source Strategy: “For the first edition of the 2020 FLOSS Roadmap, we had the ambition to bring to the debate a new lighting thanks to an introspective and prospective vision. This second edition demonstrates that not only this ambition is reached but that the 2020 FLOSS Roadmap is actually a guide describing the paths towards a knowledge economy and society based on intrinsic values of FLOSS.”

About 2009 version (full printable version available here)

So far, so good: Contributors to the 2020 FLOSS Roadmap estimate that their projections are still relevant. The technological trends envisioned – including the use of FLOSS for virtualization, micro-blogging and social networking – have been confirmed. Contributors consider that their predictions about Cloud Computing may have to be revised, due to accelerating adoption of the concepts by the market. The number of mature FLOSS projects addressing all technological and organizational aspects of Cloud Computing is confirming the importance of FLOSS in this area. Actually, the future of true Open Clouds will mainly depend on convergence towards a common definition of ‘openness’ and ‘open services’.

Open Cloud Tribune: Following the various discussions and controversies around the topic “FLOSS and Cloud Computing”, this opinion column aims to nourish the debate on this issue by freely publishing the various opinions and points of view. The 2009 article examines the impact of Cloud Computing on employment in IT.

Contradictory evolutions: While significant progress was observed in line with the 2020 FLOSS Roadmap, the 2009 Synthesis highlights contradictory evolutions: the penetration of FLOSS continues, but at the political level there is still some blocking. In spite of recognition from ‘intellectuals’, the alliance between security and proprietary software has been reinforced, and has delayed the evolution of lawful environments. In terms of public policies, progress is variable. Apart from Brazil, the United Kingdom and the Netherlands, which have made notable moves, no other major stimulus for FLOSS has appeared on the radar. The 2009 Synthesis questions why governments are still reluctant to adopt a more voluntary ‘FLOSS attitude’. Because FLOSS supports new concepts of ‘society’ and the links between technology and solidarity, it should be taken into account in public policies.

Two new issues: Considering what has been published in 2008, two new issues have emerged, which will need to be explored in the coming months: proprietary hardware platforms, which may slow the development of FLOSS, and proprietary data, which may create critical lock-ins even when software is free.

The global economic crisis: While the global crisis may have had a negative impact on services-based businesses and service vendors specializing in FLOSS, it has proved to be an opportunity for most FLOSS vendors, who have seen their business grow significantly in 2009. When it comes to Cloud-based businesses, the facts point to a massive migration of applications in the coming months. Impressive growth in hosting is paving the way for these migrations.

Free software and the financial system: this new theme of the 2020 FLOSS Roadmap appears in the 2009 version in order to take into account the role that FLOSS can play in a system which is currently the subject of much reflection.

Sun/Oracle: The acquisition of Sun by Oracle is seen by contributors to the 2009 Synthesis as a major event, with the potential risk that it will significantly redefine the FLOSS landscape. But while the number of major IT players is decreasing, the number of small and medium-size companies focused around FLOSS is growing rapidly. This movement is structured around technology communities and business activities, with some of the business models involved being hybrid ones.

FLOSS is like forests: The 2009 Synthesis puts forward this analogy to make the complexity of FLOSS easier to understand through a simple and rich image. Like forests and their canopies, which play host to rich bio-diversity and diverse ecosystems, FLOSS is diverse, with multiple layers and branches both in terms of technology and of wealth creation. Like a forest, FLOSS provides vital oxygen to industry. Like forests, which have brought both health and wealth throughout human history, FLOSS plays an important role in the transformation of society. Having accepted this analogy, contributors to the Roadmap subsequently identified different kinds of forests: ‘old-growth forests’ or ‘primary forests’, which are pure community-based FLOSS projects such as Linux; ‘cultivated forests’, which are professional and business-oriented projects such as JBoss and MySQL; and ‘FLOSS tree nurseries’, which are communities such as Apache, OW2 and Eclipse. And finally, the ‘IKEAs’ of FLOSS are companies such as Red Hat and Google.

Ego-altruism: The 2009 Synthesis insists on the need to encourage FLOSS users to contribute to FLOSS, not for altruistic reasons but for egoistical ones. It literally recommends that users help only when doing so benefits them. Thanks to FLOSS, public sector bodies, NGOs, companies, citizens, etc. have full, free and fair access to technologies that enable them to communicate on a global level. To make sure they will always have access to these powerful tools, they have to support and participate in the sustainability of FLOSS.

New Recommendation: To reinforce these ideas, the 2020 FLOSS Roadmap, in its 2009 Synthesis, added the following to the existing list of recommendations:

Acknowledge the intrinsic value of FLOSS infrastructure for essential applications as a public knowledge asset (or ‘as knowledge commons’), and consider new means to ensure its sustainable development.

Contact: http://www.2020flossroadmap.org/contact/

All the possible errors, in a single slide.

Posted by cdaffara in blog, divertissements on October 5th, 2009

I found this slide deck from a very large and visible software company (which I will not name, leaving that as an exercise for the reader). I believe it was created to provide a clear response to many popular misconceptions about open source software. Unfortunately, it seems to collect in a single slide most of the myths and false assumptions that I have already discussed in our past work within FLOSSMETRICS.

First of all, “zero cost” may or may not be true; it simply is not the defining attribute of open source software. Saying that proprietary software has a “lower ongoing cost” is not true overall (and I have tons of independent confirmation of that), and claiming that proprietary software has more features is, again, not universally true. The claim that proprietary software maintains backward compatibility generated substantial laughter among the poor people here in the office who have to provide support to our commercial customers. And the claim that proprietary software is “more secure” brought up the recent attack against DNS, describing it as poorly protected freeware.

Should I continue? Open standards, anyone? And the last one, implying that only proprietary software is based on managed development? Any commercial OSS vendor would happily dismiss this claim as untrue. Commitment to support? I believe my three fellow readers would have no difficulty thinking of proprietary products that were bought and buried, or that were simply scrapped altogether.

Ah, I would happily send my guide to this slide author, but I believe it would probably not change this company’s views a single bit.

OSS: the real point is software control

Posted by cdaffara in blog, divertissements on September 30th, 2009

Ah, the morning aroma of a freshly brewed flame war… with our restless Matt Asay sternly observing that in the free software/open source war, open source won and we are all the better for it. Of course, this adds to the pile of posts that consider Richard Stallman a relic of a bygone era, alongside the thoughtful comments of my favourite thinker, Glyn Moody, and the pragmatic, reasoned views of Matthew Aslett of the 451 Group.

If there is one thing that emerges clearly from all these discussions, it is that fundamentalism is wrong. It is wrong when it is spelled “OSS is better”, and it is wrong when it claims “Microsoft is better” without any reasoning. Rational thinking, not religion, should be the basis of discussion. This is not to say that religious or moral motivations are bad, but beliefs should be acknowledged up front, to avoid turning every discussion into a flame war. That’s why I feel at ease criticizing Stallman for what I perceive as personal attacks, while at the same time recognizing that without him and the GPL, the free software and open source world would be much less developed and relevant.

My perspective is simple: every user, developer and administrator who depends on software (and basically everyone does, today) should think before using a piece of software or a service, and understand who controls it and, if this “who” is not the user, what can happen. It is not a question of “religious beliefs” but of practical thinking: is the software yours? Does the service you are using give you the opportunity to move somewhere else? What happens if the developers are not going in the direction you need?

If we take this as the basis for discussion, many arguments in the OSS/FS camp become much simpler. The crusade against software patents is a way of defending the end user’s rights of use against arbitrary legal attacks; in this sense, the only real reason to be unhappy about something like Mono is not that it is a Microsoft “standard”, but that it is probably covered by unknown patents. The same applies to Flash: most people depend on a single company for what amounts to a platform, one that has still not been replicated by OSS alternatives (like Gnash) and that is in any case potentially covered by patents held not only by Adobe but by many other companies as well. The “victory of pragmatism” that Matt proclaims is not really about FS versus OSS (which are exactly the same thing) but about the general overcoming of emotion-based arguments, which is absolutely a positive thing.

But the “new pragmatism” should also be viewed with suspicion, exactly like the claims that free software is “better” without reason. Take Mono as an example: it is now pushed as a way to overcome something equally proprietary, namely Flash. What happens when Microsoft stops promoting it? It is OSS, so in theory it can go on forever, but very few will risk infringing patents with it, and so it will remain more or less limited to shops already using .NET elsewhere (and thus having paid for the right of use), limiting its growth potential. The scenario is not so far-fetched after the unveiling of a real Silverlight port to Moblin, which makes Mono more or less redundant. Some “open core” systems suffer from the same problem: the proprietary part forces the user to abide by whatever decisions the vendor makes, regardless of the OSS license the “open” part is released under.

The uncritical embrace of online services is similarly flawed: what happens if the company goes bankrupt or discontinues the service? If you use EC2, you can always create your own infrastructure using Eucalyptus and continue your work. Can you say the same of all the services being promoted right now? Can you get a complete copy of your data and move it somewhere else?

Control is what really matters, on-premise and online: who holds it, how it is exercised, and what it may affect. You may prefer the ethical angle (as Stallman does) or the economic angle (as I do), but the end result is the same, exactly as free software and open source are the same. The critical aspect is being able to assess this control and weigh whether the lack of control is compensated by the features you get (which is reasonable), and what kind of risk you are accepting in exchange. You like the integrated set of features offered by Microsoft? That’s fine, as long as you know that some of their past actions were not exactly transparent, and that your control over their offering is very limited. You like Google? Good! Just understand what happens if Gmail does not work. You prefer open source? Good! But along with the increased control it gives you, you also get responsibility and increased effort.

Always ask yourself: is it your software, or not? Think about it, and don’t let the question slip from your mind, because your business may depend on it.