Archive for category blog

Random thoughts: TomTom, Alfresco

Posted by cdaffara in OSS business models, OSS data, blog on March 31st, 2009

Just a quick note on two relevant events at the beginning of this week: the TomTom settlement, and Alfresco’s change in strategy (moving to an “open core” model).

As for TomTom: I am sorry for all the people that believed that the Dutch company could have been turned into a white knight of Linux, but it was clear from the start that the counter-suing was designed to create a level playing field for negotiation. No CEO would have fought the patent fight to the end, knowing that, in the event of losing, the damages and remedial actions would have effectively killed the company. And you don’t know in advance what your chances are, which is really one of the most dangerous aspects of patents. As for those that claimed that the FAT32 specification was effectively public, the reality is that courts rejected the patents on the original FAT implementation, but not on its extensions; and that the “free to use” specification of FAT32 released by Microsoft is limited to the following areas:

“(e) Each of the license and the covenant not to sue described above shall not extend to your use of any portion of the Specification for any purpose other than (a) to create portions of an operating system (i) only as necessary to adapt such operating system so that it can directly interact with a firmware implementation of the Extensible Firmware Initiative Specification v. 1.0 (“EFI Specification”); (ii) only as necessary to emulate an implementation of the EFI Specification; and (b) to create firmware, applications, utilities and/or drivers that will be used and/or licensed for only the following purposes: (i) to install, repair and maintain hardware, firmware and portions of operating system software which are utilized in the boot process; (ii) to provide to an operating system runtime services that are specified in the EFI Specification; (iii) to diagnose and correct failures in the hardware, firmware or operating system software; (iv) to query for identification of a computer system (whether by serial numbers, asset tags, user or otherwise); (v) to perform inventory of a computer system; and (vi) to manufacture, install and setup any hardware, firmware or operating system software.”

So, hardly usable for anything within Linux. At the moment, probably the safest choice would be for embedded vendors to remove the FAT32-specific portions from the code, and use only the traditional 8.3 FAT allocation, possibly extending the use of the filesystem-in-file strategy commonly used in games (like id Software’s PAKs).

As for Alfresco: first of all, I wish all the best for Alfresco and their product. It has always been one of my favourite examples of a successful commercial OSS system, and so I am happy to see that they are getting substantial increases in their turnover (including a near doubling of revenue year-over-year). On the other hand, I understand perfectly the frustration that “some of the biggest enterprises in the world (and I mean Fortune 50 and even Fortune 10) are only using the open source version of the product”. It seems to me that the choice of what features should be available to enterprise customers vs. open source ones is a good initial choice, and I wish them every possible success. However, I am perplexed: if the OSS Alfresco is doing so well (doubling revenues!), is there really a need for a change in approach? And, given that RedHat is doing quite well, even with CentOS being used in many large-scale companies, is it really necessary?

If the need to increase adoption was effectively so strong, I would probably have adopted a timed release for the bug fixes (effectively introducing a RHEL/Fedora like split), and spun off the plugins for connecting to the proprietary systems as a completely different offering; this way, the distinction between what is enterprise and what is open source would be clearer. Anyway: good luck, Alfresco! And continue to be my hero.

I respectfully disagree.

Posted by cdaffara in OSS business models, OSS data, blog on March 25th, 2009

Microsoft has recently published a white paper on Microsoft and OSS, called “Participation in a world of choice”. It’s a nice 16-page PDF, with kind words on the role of OSS in the modern IT landscape, the fact that “It is important to acknowledge that the relationship between open source and Microsoft has at times been characterized by strong emotions and harsh words” (some of which were from Microsofties themselves, like the infamous “cancer”), and that the future will be nice and warm and fuzzy. Despite the fact that – for probably the first time – a white paper contains a significant bibliography that includes academic papers, I have to say that I am not impressed by the content, which basically tries to reposition free software and open source in a context that is not entirely appropriate, and selectively presents a view of the market that is, in my opinion, not accurate.

(A note of warning: this post is not written out of “hate” of Microsoft. I am old enough to remember when IBM was the death empire (and its legal team was called “the nazguls” because they were unstoppable, fearless and devoid of human emotions), and when Sun claimed that linux was a “bathtub of code, … and sometimes what floats in it is not pleasant”; at the same time I recognize the great advances that both companies made in recent years in open source. It would be my greatest pleasure to see Microsoft participate in OSS fully in the same way, but as it was for the companies I mentioned before, they have to prove themselves, and be slightly less schizophrenic in their message on OSS. End of note.)

So, I would like to point out a few things that I found in the paper:

- Page 1: “… and increasing opportunities for developers to learn and create by combining community-oriented open source with traditional commercial approaches to software development.” First of all, not all open source is community oriented; here, Microsoft implies that all open source is developed in a community-oriented way (something that is not true, for example, for dual-licensed software that has a much lower external participation rate, or for vendor-dominated consortia). Then, the use of “commercial” is wrong: free software and open source can be as commercial as software from the proprietary world. I would say that the copywriter here tried to suggest that OSS is “noncommercial” (something that our study …), while the reality is that FLOSS is “nonproprietary”.

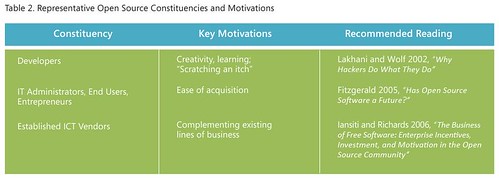

- Then we have the table on page 4:

What they present is a very limited view both of the constituencies and of the motivations for using OSS. There are many studies that present the reasons for developers to participate in OSS; among them are ethical reasons, practical reasons (what is called here “scratching an itch”), self improvement, and “signaling” (demonstrating capabilities in development to increase employment opportunities); moreover, roughly 50% of the developers are paid to work on OSS. Assuming that transient reasons are the real motivations of OSS development provides a negative view (because as those personal interests may be short-lived, the software itself may be short-lived too).

- In the same table: the author places “IT Administrators, End Users, Entrepreneurs” in a single category. OK, this is not a research article, but even a novice would probably find differences among them. If the tags identify endogenous use, then having as a single advantage “ease of acquisition” is clearly a way to downplay the additional advantages of OSS. Among them are the reduced lock-in (that, coincidentally, is never mentioned in the document) and reduced costs (as mentioned previously in my work on “OSS myths”, for example in the surveys by CIOinsight, or our work in the COSPA project). If the mention is for companies that use OSS inside their software (either for internal use or for commercialization), there are other demonstrations that OSS has substantial economic advantages. And where are the OSS companies? Those cannot be included in the last line, about “established ICT vendors”… They even mention an example themselves that does not fit in the table: “Apple shifted to what has been called an “embrace and layer” strategy for its consumer operating system by leveraging permissively licensed open source BSD code for functionality such as networking infrastructure, while focusing its commercial R&D on building a proprietary graphical user interface (GUI) “on top,” and licensing the resulting product as a whole under a traditional commercial license.” (In my classification, that would fit within “R&D cost sharing”.)

- Page 5: “Another industry analyst firm, the 451 Group, identified more than 100 ICT companies who rely on OSS to generate a significant portion of their revenue. At the same time, it found “the majority of open source vendors utilize some form of commercial licensing to distribute, or generate revenue from open source software”.” Of course! The error is the same one already mentioned, that is, the confusion between “proprietary” and “commercial”.

- Page 7: “OSS approaches tend to be relatively more successful when the end users of a technology are themselves developers, as opposed to nontechnical end users”. The phrase is incorrect, and arises from the identification of OSS developers as volunteers that “scratch an itch”. From a logical point of view, there are two errors. First of all, there are many technical users that are not developers (system administrators are a good example); in fact, the very high penetration of OSS in server environments is not strictly related to developer participation. Second, the assumption that OSS is inherently difficult to use (implied in the phrase) is easily dispelled by the great success of Firefox and OpenOffice (both of which require no “developer” in sight).

- Page 7: “Windows Server product strategy continues to focus on offering a product that IT administrators will choose over alternatives (including Linux), because it is highly manageable with readily available skills, supported by a wide range of third-party applications, and offers the lowest total cost of ownership (TCO).” This is marketing, not research- first of all, the TCO debate is still not solved in favor of Microsoft (and after reviewing the TCO numbers for COSPA, I suspect that that would not be an easy win for them). Then, it implies that OSS skills are not readily available (again, something that is unproven) and it implies that OSS alternatives have a limited range of third-party applications (look at RedHat certified applications list for a good counterexample).

- Page 8: “For developers, the entire .NET Framework is available as a reference source to enable them to debug against the source code”. And since it is not open source, this should probably not be mentioned here.

- Page 9: “One key, supporting principle is respect for the diverse—and continually evolving—ways that individuals and companies choose to build and market what they create. No efficient, effective technical solution should be precluded or advantaged because an individual, a vendor, or a development community has chosen a particular business model—whether based on software licensing, service and support, advertising, or, increasingly, some combination thereof.” This is aimed squarely at those governments that are trying to establish pro-OSS policies, and ignores the fact that in many cases the inherent market situation (with a de-facto monopoly) is not a balanced market in itself. Recently it was found that “Software tenders by European public administration often may not comply with EU regulations, illegally favouring proprietary applications”; so the advantage is at the moment squarely with proprietary software vendors, and the recent guidelines from the EU are designed to provide a more balanced market. My own suggestion is to evaluate the whole cost of an IT adoption using metrics that cover the full lifetime of an application; my favourite is the German WiBe model.

- Page 9: “A second key principle is a balance that preserves constructive competition and healthy incentives: when individuals and companies are rewarded for creative differentiation, customers benefit from a dynamic marketplace that offers more product choices. Incentives for commercial investment in new innovation should coexist and coevolve alongside practical mechanisms for sharing intellectual property (IP)—with the overarching focus on a dynamic industry that continues to bring great ideas to customers.” And this is for those that are asking for the abolition or the reduction in scope of software patents, and for open standards that are not IPR-encumbered. I have already written in the past against software patents, and I believe I am not alone in claiming that the hypothetical advantage of software patents seems to pale in comparison to the extensive damage that they are causing to the industry. (And no: the claim that the thriving software ecosystem that we see now happens because of software patents should be rephrased as “the thriving software ecosystem that we see now happens despite software patents”.)

- Page 10: “We have long sought to contribute to the growth of an open ecosystem, whether through publicly documenting thousands of application programming interfaces (APIs)”. It took the European Commission, and a record fine for violation of EU monopoly laws, to obtain the release of crucial APIs…

- Page 10: “More than 500 commercial IP agreements with companies from a wide range of industries- including companies building their businesses around OSS”. Again: I would not claim that, after the substantial brouhaha over the Novell patent pact (considering that it does not cover things like OpenOffice…)

- Page 10: “And we have stated broad openness to noncommercial OSS development through the Patent Pledge for Open Source Developers.” Nice, despite the fact that “noncommercial OSS” is an oxymoron, as placing additional redistribution limits makes it non-OSS.

The paper ends with a very promising phrase: “We recognize that in the future, Microsoft’s relationship with OSS may be punctuated by strong emotions and the possibility of interests that at times will be in conflict. But we are profoundly optimistic … will surface new opportunities for Microsoft and open source to “grow together” in purposeful and complementary ways.”

I would be very happy to have Microsoft as a good OSS citizen, even with the recognition that their paths may sometimes conflict (this is also true of other “fellows” like IBM or Sun). But I would start with a more balanced introduction, or at least one that does not have such a significant percentage of “hidden” ideas. Openness is, before everything else, in the mind; if your ideas are strong enough they will survive and thrive.

From theory to practice: the personal desktop linux experiment

Posted by cdaffara in OSS business models, OSS data, blog on March 3rd, 2009

In my previous post, I tried to provide a simple theoretical introduction to the UTAUT technology adoption model, and the four main parameters that govern the probability of adoption; as a complement, I will present here a small demonstration on how to use the model to improve the adoption of a specific technology, that is the user-chosen personal computer with Linux as single operating system. The reason for the “user-chosen” is related to the different adoption processes for personal computers in a personal setting (for example hobbyist, student, micro-enterprise) versus the business or public authority environment- those will be discussed in a future post. The idea for this experiment came out of a workshop I held in Manila, where I had the pleasure to discuss with the technical manager of the largest PC chain in the Philippines about how to best introduce a Linux PC into the market.

To set the context: we will present an example of an optimization exercise for the take-up of a Linux-based PC, to be distributed and used mainly for personal purposes, and acquired through direct channels like large distribution networks, computer reseller chains, individual stores. The first important point is related to the market: there are really two separate transactions, the first one from the computer manufacturer to the chain and the second from the chain to the individual users. The two markets are distinct, and have widely differing properties:

- from manufacturer to chain: there is a small number of legal agreements (the reseller/redistribution agreements); the main driver for the manufacturer is to acquire volume, while the chain is interested in profit margins for the single sale, overall volume (how fast the stock can be moved), and sales of complementary products

- from chain to users: there is a large number of very small transactions; the main driver for the chain is to get as much margin on aggregate sales as possible (think selling the PC, the printer and associated consumables) while the buyer is looking for a specific set of functionalities at a reasonable price, like being able to browse the web, using email, writing letters and such.

What is useful for the first transaction may be useless for the second; the fundamental idea is that both transactions have to happen and be sustainable for the market to be self-sustaining and long running. A short-running market may even be negative: if a chain starts selling a model and discontinues it after a little while, the end users may believe that it is no longer sold because of defects or because it was not competitive. Think for a moment about the comments after WalMart stopped selling in store the very low cost Linux PC (that is still offered online); despite the fact that no real quality issues were found, most commentators associated the end of the experiment with a general failure for Linux PCs. (As for the source of the reference to quality issues, it is extracted from the Comes-vs-Microsoft documents related to WalMart: “We understand that there has not been a customer satisfaction issue. WalMart sets fairly strict standards for customer return rates and service calls”.)

So, our optimization experiment needs to satisfy two constraints: guarantee a margin (possibly compounded from accessory sales) on every sale, and guarantee low inventory (fast turnaround). This means that adopters should buy with a high probability after first or second sight. Let’s recall the four parameters for adoption, and apply them to the specific situation:

- performance expectancy: is this PC fast? is it able to perform the tasks that I need?

- effort expectancy: is it easy to use?

- social influence: is it appropriate for me to use? to be seen buying it? what will my peers say when I show it?

- facilitating conditions: is there someone that will help me in using it? will it work with my network?

Let’s start with performance expectancy. Most “Linux PCs” are really very low cost, substandard machines, assembled with the overall idea that price is the only sensitive point. In this sense, while it is true that Linux and open source allow for far greater customizability and speed, it is usually impossible to compensate for extreme speed differences; this means that to be able to satisfy the majority of users, we cannot aim for “the lowest possible price”. A good estimate of the bill of materials is the median of the lowest quartile of the price span of current PCs in the market (approximately 10% to 20% more than the lowest price). After the hardware is selected, our suggestion is to use a standard Linux distribution (like Ubuntu) and add to it any necessary component that will make it work out of the box. Why a standard distribution? Because this way users will not only have a potential community of peers to ask for help, but the cost of maintaining it will also be spread; as an example, most tailor-made Linux distributions for netbooks are not appealing because they employ old versions of software packages. This provides an explanation of why Dell had so much success in selling Linux netbooks compared to other vendors, with one third of the netbooks sold with plain Ubuntu. Having a standard distribution reduces costs for the technology provider, provides a safety mechanism for the reseller chain (that is not dependent on a single company) and provides the economic advantage of a cost-less license, which increases the chain’s margin.
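As a rough illustration of the price estimate mentioned above, here is a minimal Python sketch. It assumes that “lowest quartile” means the cheapest 25% of the models currently on sale; the function name and the sample prices are hypothetical and are not taken from any real market survey.

```python
from statistics import median


def target_price(prices):
    """Return the median of the lowest quartile of the observed prices."""
    if not prices:
        raise ValueError("need at least one price")
    ordered = sorted(prices)
    # Lowest quartile: the cheapest 25% of the models (at least one model).
    quartile_size = max(1, len(ordered) // 4)
    return median(ordered[:quartile_size])


# Hypothetical shelf prices of PCs currently on sale (arbitrary currency units).
sample_prices = [300, 330, 345, 360, 400, 450, 500, 550, 600, 700, 800, 900]

estimate = target_price(sample_prices)
premium = estimate / min(sample_prices) - 1
print(f"target price: {estimate} ({premium:.0%} above the cheapest model)")
```

With real data, the premium over the cheapest model can serve as a sanity check against the 10% to 20% range quoted in the text.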

Effort expectancy: what is the real expectancy of the user? Where does the user obtain his information? The reality is that most potential adopters get their information from peers, magazines and in many cases from in-store exploration and talks with store clerks. The clear preference that most users demonstrate towards Windows really comes from rational reasoning based on incomplete information: the user wants to use the PC to perform some activities, he knows that to perform such activities software is needed, he knows that Windows has lots of software, so Windows is a safe bet. The appearance of Apple OS X demonstrated that this reasoning can be modified, for example by presenting a nicer user experience; OS X owners get in contact with other potential adopters, who are shown a different environment that seems to be capable of performing the most important tasks, and so the diffusion process can happen. For the same process to be possible with Linux, we must improve the knowledge of users, to show them that normal use is no more intimidating than that of Windows, and that software is available for the most common tasks.

This requires two separate processes: one to show that the “basic” desktop is capable of performing traditional tasks easily, and another to show what kind of software is available. My favourite way for doing this for in-store experiences is through a demo video, usually played in continuous rotation, that shows some basic activities: for example, how Network Manager provides a simple, one-click way to connect to WiFi, or how Nautilus provides previews of common file formats. There should be a fast, 5-minute section to show that basic activities can be performed easily. I prefer the following list:

- web browsing (showing compatibility with sites like FaceBook, Hi5, Google Mail)

- changing desktop properties like backgrounds or colours

- connecting to WiFi networks

- printer recognition and setup

- package installation

I know that Ubuntu (or OpenSUSE, or Fedora) users will complain that those are functionalities that are nowadays taken for granted. But consider what even a technical journalist may sometimes write about Linux: “It booted like a real OS, with the familiar GUI of Windows XP and its predecessors and of the Mac OS: icons for disks and folders, a standard menu structure, and built-in support for common hardware such as networks, printers, and DVD burners.”

Booted like a real OS. And - icons!

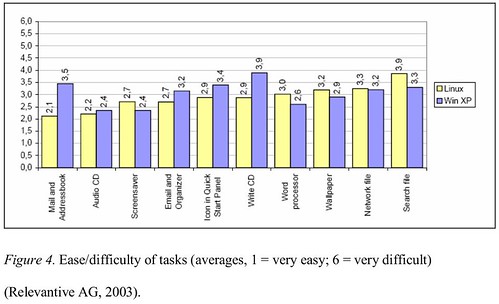

So much for the change in perspective, as the Vista user perception problem demonstrated. So, a pictorial presentation is a good medium to provide an initial, fear-reducing informative presentation that will not require assistance from the shop staff. Along the same lines, a small informative session may be prepared (we suggested an 8-page booklet) for the assistants, so that they can provide answers comparable to those offered for Windows machines. The usability of modern Linux distributions is actually good enough to be comparable to that of Windows XP on most tasks. In a thesis published in 2005, the following graph was presented, using data from previous work by Relevantive:

The time and difficulty of tasks were basically the same; most of the problems that were encountered by users were related to bad naming of the applications: “The main usability problems with the Linux desktop system were clarity of the icons and the naming of the applications. Applications did not include anything concerning their function in their name. This made it really hard for users to find the right application they were looking for.” This aspect was substantially improved in recent desktop releases, by adding a suffix to most applications (for example, “GIMP image editor” instead of “GIMP”). As an additional result, the following were the subjective questionnaire results:

- 87% of the Linux test participants enjoyed working with the test system (XP: 90%)

- 78% of the Linux test participants believed they would be able to deal with the new system quickly (XP: 80%).

- 80% of the Linux test participants said that they would need a maximum of one week to achieve the same competency as on their current system (XP: 85%).

- 92% of the Linux test participants rated the use of the computers as easy (XP: 95%).

This provides evidence that, when properly presented, a Linux desktop can provide a good end-user experience.

The other important part is related to applications: two to five screenshots for every major application will provide an initial perception that the machine is equally capable of performing the most common tasks; and equally important is the fact that such applications need to be pre-installed and ready to use. And with ready to use, I mean with all the potential enhancements that are available but not installed, like the extended GIMP plugin collection that is available under Ubuntu as gimp-plugin-registry, or the various thesauri and cliparts for OpenOffice.org. A similar activity may be performed with regards to games, that should be already installed and available for the end user. Some installers for the most requested games may be added using wine (through a pre-loader and installer like PlayOnLinux); we found that in recent Wine builds performance is quite good, and in general better than that of proprietary repackaging like Cedega.

One suggestion that we added is to have a separate set of repositories from which to update the various packages, to allow for pre-testing of package upgrades before they reach the end users. This, for example, would allow for the creation of alternate packages (outside of the Ubuntu main repositories) that guarantee the functionality of the various hardware parts even if the upstream driver changes (as recently happened with the inclusion of the new Atheros driver line in the kernel, which complicated the upgrade process for netbooks with this kind of chipset). The cost and complexity of this activity is actually fairly low, requiring mainly bandwidth and storage (something that in the time of Amazon and cloud computing has a much lower impact) and limited human intervention.
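The pre-testing idea can be sketched as a two-tier repository in which an update reaches end users only after it has passed on every supported hardware configuration. The following Python model is purely illustrative: the class, the profile names and the package names are hypothetical, and it does not use or imitate any real repository tool.

```python
from dataclasses import dataclass, field


@dataclass
class StagedRepository:
    """Toy model: updates land in staging and are promoted to stable after testing."""
    hardware_profiles: list
    staging: dict = field(default_factory=dict)  # package -> candidate version
    stable: dict = field(default_factory=dict)   # package -> version served to users
    results: dict = field(default_factory=dict)  # (package, version) -> {profile: passed}

    def submit(self, package, version):
        """Register a new candidate version coming from upstream."""
        self.staging[package] = version
        self.results[(package, version)] = {}

    def record_test(self, package, version, profile, passed):
        """Store the outcome of a pre-test on one hardware profile."""
        self.results[(package, version)][profile] = passed

    def promote(self, package):
        """Publish the candidate only if it passed on every supported profile."""
        version = self.staging.get(package)
        if version is None:
            return False
        outcome = self.results[(package, version)]
        if all(outcome.get(profile, False) for profile in self.hardware_profiles):
            self.stable[package] = version
            del self.staging[package]
            return True
        return False


# Hypothetical scenario: a new driver works on desktops but breaks one netbook chipset.
repo = StagedRepository(hardware_profiles=["netbook-atheros", "desktop-intel"])
repo.stable["wifi-driver"] = "1.0"
repo.submit("wifi-driver", "2.0")
repo.record_test("wifi-driver", "2.0", "desktop-intel", True)
repo.record_test("wifi-driver", "2.0", "netbook-atheros", False)
promoted = repo.promote("wifi-driver")  # end users keep receiving version 1.0
```

A profile with no recorded result counts as a failure, so an untested configuration blocks promotion instead of letting the update slip through.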

The next variable is social acceptance, which is much more nuanced and difficult to assess; it also changes in a significant way from country to country, so it is more difficult for me to provide simple indications. One aspect that we found quite effective is the addition, on the side of the machine, of a simple hologram (similar to that offered by proprietary software vendors) to indicate a legitimate origin of the software. We found that a significant percentage of potential users actually looked at the back or the side of the machine to see if such a feature was present, fearing that the machine could possibly be loaded with pirated software. Another important aspect is related to the message that is associated with the acquisition: one common error is to mark the machine as “the lowest cost”, a fact that sends two negative messages: that the machine is somehow for “the poor”, and that value (a complex, multidimensional variable) is collapsed onto price alone, making it difficult to convey the message that the machine is really more about “value for money” than “money”. This is similar to how Toyota invaded the US car market, by focusing both on low cost and quality, and making sure that value was perceived in every moment of the transaction, from when the potential customer entered the show room to when the car was bought. In fact, it would be better to have a combined pricing that is slightly higher than the lowest possible price, to make sure that there is a psychological “anchoring”.

While price sensitive users are, along with “enthusiasts”, those that up to now drove the adoption of Linux on the desktop, it is necessary to extend this market to the more general population; this means that purely “price-based” approaches are not effective anymore.

As for the last aspect, facilitating conditions, the main hurdle perceived is the lack of immediate assistance by peers (something that is nearly guaranteed with Windows, thanks to the large installed base). So, a feature that we suggested is the addition of an “instant chat” icon on the desktop to ask for help, which brings up a set of web pages with some of the most commonly asked questions and links to online fora. The real need for such a feature is somewhat reduced by the fact that the hardware is preintegrated and that pre-testing is performed before package updates, but it is a powerful psychological reassurance, and should receive a central place on the desktop. Equally important is the inclusion of non-electronic documentation, which allows for easy “browsing” before the beginning of a computing session. A very good example is the Linux Starter Pack, an introductory magazine-like guide.

We discovered that plain, well built Linux desktops are generally well accepted, with limited difficulties; after 4 weeks, most users are proficient and generally happy with their new user environment.

Random walks and Microsoft

Posted by cdaffara in OSS business models, OSS data, blog, divertissements on February 26th, 2009

Sometimes talking about Microsoft and Open Source software is difficult, because the company seems to have many heads, looking in different directions. At the Stanford Accel Symposium, Bob Muglia, president of Microsoft’s Server and Tools Business, was bold enough to say: “At some point, almost all our product(s) will have open source in (them)…If MySQL (or) Linux do a better job for you, of course you should use those products”. Of course, we all know that; even Steve Ballmer mentioned that “I agree that no single company can create all the hardware and software. Openness is central because it’s the foundation of choice”; a statement on which Matt Asay commented, with some irony, that openness claims are mainly directed towards competitors like Apple and its iTunes/iPod offer.

I would just like to point to one of the Comes vs. Microsoft exhibits (that are sometimes more interesting than your average John Grisham or Stephen King novels) where we can find such pearls of openness and freedom of choice:

From: Peter Wise
Sent: Monday, October 07, 2002 9:43 AM
To: Server Platform Leadership Team
Subject: CompHot Escalation Team Summary - Month of September 2002

CompHot Escalation Team Summary - Month of September 2002

Microsoft Confidential

Observations and Issues

* Linux infestations are being uncovered in many of our large accounts as part of the escalation engagements. People on the escalation team have gone into AXA, Ford, WalMart, the US Army, and other large enterprises, where they've helped block one Linux threat, only to have it pop up in other parts of the businesses. At General Electric alone, at least five major pilots have been identified, as well as a new "Center of Excellence for Linux" at GE Capitol.

“Infestation” is not exactly the word I would use to express the idea of “customer choice”, but you know how the software world is a battle zone. I am so relieved to see that they are now really perceiving open source as part of their ecosystem.

Transparency and dependability for external partners

Posted by cdaffara in OSS business models, OSS data, blog on February 25th, 2009

As a consultant, I frequently have to answer questions about “what makes open source better”. They come not only from adopters, but also from the companies and integrators that form a large network ecosystem, and that (up to now) had only proprietary software vendors as a source of software and technology. Many IT projects had to “integrate” and create workarounds for bugs in proprietary components, because no feedback on status was available. Mary Jo Foley writes on the lack of feedback to beta testers from Microsoft:

“During a peak week in January we (the Windows dev team) were receiving one Send Feedback report every 15 seconds for an entire week, and to date we’ve received well over 500,000 of these reports.”

Microsoft has “fixes in the pipeline for nearly 2,000 bugs in Windows code (not in third party drivers or applications) that caused crashes or hangs.”

That’s great. Microsoft is getting a lot of feedback about Windows 7. What kind of feedback are testers getting from the team in return? Very little. I get lots of e-mail from testers asking me whether Microsoft has fixed specific bugs that have been reported on various comment boards and Web sites. I have no idea, and neither do they. (emphasis mine)

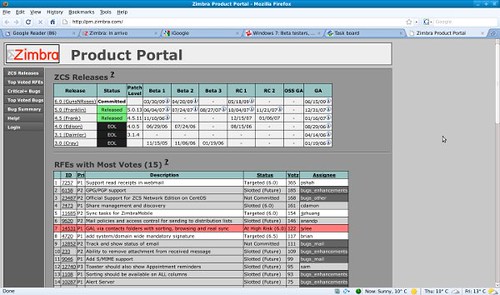

Open source, if well managed, is radically different; I had a conversation with a customer just a few minutes ago, asking for specifics on a bug encountered in Zimbra, answered simply by forwarding the link to the Zimbra dashboard:

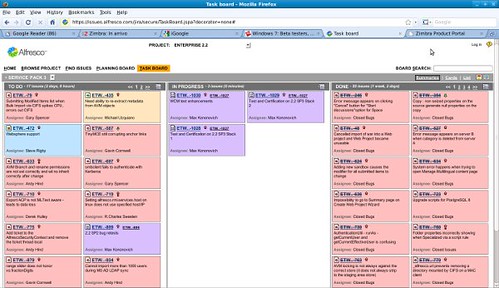

Not to be outdone, Alfresco has a similar openness:

Or one of my favourite examples, OpenBravo. Transparency pays because it provides a direct handle on development, and provides a feedback channel for the (eventual) network of partners or consultancies that “are living” off an open source product. This kind of transparency is becoming more and more important in our IT landscape, because time constraints and visibility of development are becoming even more important than pure monetary considerations; it also allows adopters to plan for alternative solutions, if needed, depending on the individual risks and effort estimates.

Hello world!

Posted by cdaffara in OSS business models, OSS data, blog on February 17th, 2009

Welcome to our public blog, where we will try to provide a window on the research activities that we do in the field of open source business models and OSS economics. Most of our work is oriented towards helping our customers in the evaluation and adoption of OSS (and sometimes helping companies in offering OSS-based services), so the focus will be clearly oriented towards commercialization and business aspects, and less on technical aspects; I hope that you will enjoy our effort and I invite any people interested in this research area to transform this blog into a conversation and discussion on what is still a wide open research space.